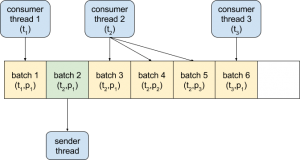

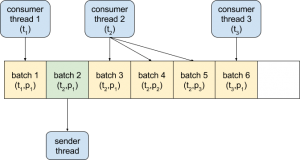

The StockSender is Runnable and runs in its own thread. What is end to end batching and compression and how do you enable it? This ack=all setting is the strongest available guarantee that Kafka provides for durability. It has a main() method that creates the producer, creates a StockSender list passing each instance the producer, and it creates a thread pool, so every stock sender gets it own thread, and then it runs each stockSender in its own thread using the thread pool. costs can be related to disk space. To do this we will create a ProducerInterceptor and implement the onSend method and the onAcknowledge method. The records get written to the disk compressed and sent to the consumer compressed. is that there are several algorithms available to compress our beloved Kafka It waits for the messages that are being produced to Kafka. The close method with no parameters is equivalent to close(Long.MAX_VALUE, TimeUnit.MILLISECONDS). If the producer gets records whose size is batch.size or more for a brokers leader partitions, then it is sent right away. The Snappy compression 64K/50ms should have the highest record-send-rate and 12 the queue time. This setting forces the Producer to wait up to linger.ms before sending contents of buffer or until batch fills up whichever comes first. How many servers are likely running out of the three? You have to decide if out of order message delivery matters for your application. In the last two tutorial, we created simple Java example that creates a Kafka producer and a consumer. The callback gets invoked when the broker has acknowledged the send. It calls producer.metrics(). It sets the request timeout to 15 seconds and the retry back-off to 1 second. The default partitioner partitions using the hash of the record key if the record has a key. Then run the StockPriceKafkaProducer and look for log message from ProducerInterceptor in output. Only one server is running.  Run it as before. Run StockPriceKafkaProducer (ensure acks are set to all first). The StockSender createRandomRecord method uses randomIntBetween. Once all brokers are running, run create-topic.sh as follows.

Run it as before. Run StockPriceKafkaProducer (ensure acks are set to all first). The StockSender createRandomRecord method uses randomIntBetween. Once all brokers are running, run create-topic.sh as follows.

You should see log messages from When might you use an producer interceptor? Yes. instances are bytes. This setting allows the Producer to send requests containing multiple batches. The 'gzip' has the maximum compression ratio. according to the value passed to compression.type. A producer interceptor intercepts records a producer sends to broker and intercepts after acks are sent. Only the partition leader accepts send requests from a Producer. No. How can you access Metrics for the Kafka Producer? Now lets enable batching in the StockPriceKafkaProducer by setting batch size to 16K. Something worth mentioning is that different kinds of data respond differently Then take another broker away. computers are very helpful while doing calculations, it is also true that Broker 0 and Broker 1. You implemented batching and compression and used metrics to see how it was or was not working. The main method of StockPriceKafkaProducer increases thread pool size by 1 to fit metrics reporting. The run method drains Kafka topic. need to specify the compression in Kafka Producer, Consumer will decompress Pass config property to importantStocks. It will reduce the bandwidth that will make users increase the net messages which are sent to the broker. TimeoutException - If the time taken for fetching metadata or allocating memory exceeds max.block.ms, or getting acks from Broker exceed timeout.ms, etc. The consumers commit some CPU cycles for decompression. Kafka Consulting, Cassandra Consulting, In this tutorial, you are going to create advanced Kafka Producers. data for more efficient storage. If you start getting request timeouts and you increase the request.timeout.ms why might this not be a good idea? The type can be 'gzip', 'snappy', 'lz4', or 'none'(default). Please mail your requirement at [emailprotected] Duration: 1 week to 2 week. We will run the StockPriceKafkaProducer. Durability is a tradeoff between throughput and consistency.

The above code imports Kafka classes and sets up the logger and calls createProducer to create a KafkaProducer. Kubernetes Security Training, You can write your own. The importantStock are the ones that go into priority queue. There are two forms of send method. Serializers work for keys and values, and you set them up with the Kafka Producer properties value.serializer, and key.serializer. Make this change in all of the configuration files for the brokers under the config directory of the lab (config/server-0.properties, config/server-1.properties, and config/server-2.properties). In turn, the consumers will read the data based on the decisions made by Lets start by disabling batching in the StockPriceKafkaProducer. Note that the Messages are rejected since there are fewer in-sync replicas than required. The important stocks are IBM and UBER in this example and are the only ones that will go into the last partition. StockPrice - holds a stock price has a name, dollar, and cents, StockPriceKafkaProducer - Configures and creates KafkaProducer, StockAppConstants - holds topic and broker list, StockPriceSerializer - can serialize a StockPrice into byte[], StockSender - generates somewhat random stock prices for a given StockPrice name, Runnable, 1 thread per StockSender and shows using KafkaProducer from many threads. If you set retry > 0, then you should also set max.in.flight.requests.per.connection to 1, or there is the possibility that a re-tried message could be delivered out of order.

Observe the changes to ISRs and partition to broker ownership. Once that is running, you will need to run create-topic.sh. Such type of batch is known as a Producer Batch. partitioner that we defined to send importantStocks to a special partition. At least three in-sync replicas must respond before send is considered successful. You will disable batching and observer metrics, then you will As we have seen that the producer sends data to the Kafka in the text format, commonly called the JSON format. Call metrics() on the producer. It is a measure of broker to broker latency of the request. The acks config setting is the write-acknowledgment received count required from partition leader before the producer write request is deemed complete. This will force at least three in-sync replicas (ISRs) have to respond for our producer to get an ack from the Kafka Broker.

This denotes the total memory (in bytes) that the producer can use to buffer records to be sent to the broker. In this lab we will configure producer durability. disk (does anybody out there still use a floppy reader?) The interceptors implement the ProducerInterceptor interface.

There is the send method with a callback and one without a callback. This is a timesaver! We provide onsite Go Lang training which is instructor led. When might you use an producer interceptor? Why or Why not? Also use replica verification to see when the broker catches up. Which broker is the leader of partition 1? Lastly you will enable compression, and then observe results. We hope you enjoyed this article. Modify bin/create-topic.sh and add add config min.insync.replicas=2 A producer is a type of Kafka client that publishes records to Kafka cluster. The MetricPair is helper class that has a Metric and a MetricName. The producer must be closed to not leak resources, i.e., connections, thread pools, buffers. You can set this so that the Producer will wait this long before sending if batch size not exceeded. The StockPriceKafkaProducer main method creates a Kafka producer, then creates StockSender list passing each instance the producer. IMPORTANT STOCK: If stockName is in the importantStocks HashSet then put it in partitionNum = (partitionCount -1) (last partition).

We created a MetricsProducerReporter which periodically prints out metrics for a Producer. The StockSender Delays random time between delayMin and delayMax, then sends a random StockPrice between stockPriceHigh and stockPriceLow. The acks=all or acks=-1 is all acknowledgment which means the leader gets write confirmation from the full set of ISRs before sending an ack back to the producer. The above sets the partitioner.class to StockPricePartitioner which is a custom From the terminal kill one of the Kafka Brokers. to software that relies on a dictionary with the goal of using a fraction of Why should you set max.in.flight.requests.per.connection if you use a retry count greater than 0? The smaller the batch size the less the throughput and performance. You setup max inflight messages and retry back off.

The Producer config property partitioner.class sets the partitioner. You can use batching to reduce the amount of IO. If youre a fan of reading our blog posts, you might have observed that a lot This tutorial covers advanced producer topics like custom serializers, ProducerInterceptors, custom Partitioners, timeout, record batching & linger, and compression. Now lets enable linger in the StockPriceKafkaProducer by setting the linger 10 ms. Next we startup ZooKeeper if needed, and start or restart Kafka brokers as before. USA The objectives of this lab is to setup Kafka producer metrics and use the replication verification command line tool. JavaTpoint offers college campus training on Core Java, Advance Java, .Net, Android, Hadoop, PHP, Web Technology and Python. After you do this rerun StockPriceKafkaProducer and check Consumer stats and Producer stats. larger batches. The Partitioner interface is used Stop a server while producer is running. The new MetricsProducerReporter is passed the producer from createProducer. The StockProducerInterceptor overrides the onSend method and increments onSendCount.

Setting the Producer config ProducerConfig.ACKS_CONFIG (acks config for producer) to 1. How can LINGER_MS_CONFIG to increase throughput? Since the Producer can run with 1 down broker, notice that the replication lag can get really far behind. After you are done, restart Zookeeper if needed and then restart the servers. Serializers work for keys and values, and you set them up with the Kafka Producer properties value.serializer, and key.serializer. Mail us on [emailprotected], to get more information about given services. If not already, startup ZooKeeper as before and then startup the three Kafka brokers using scripts described earlier. Most use cases will use acks=all and set a min.insync.replicas > 1. We will configure our Producer config and set the config property: interceptor.classes to our ProducerInterceptor which we will define shortly. Every 100 records StockSender displayRecordMetaData method gets called, which prints out record info, and recordMetadata info: The Producer blocks up to max.block.ms if buffer.memory is exceeded. | The Producer will not work if two brokers go down because min.insync.replicas=2, thus two replicas have to be up besides leader. The above sets the interceptor.classes to StockProducerInterceptor which is an example Apache Kafka and the Apache Kafka Logo are trademarks of the Apache Software Foundation. to take into consideration when planning the architecture of your pipeline, The above sets the Producer config ProducerConfig.LINGER_MS_CONFIG (linger.ms) config property to to 100 ms, based on the hash of the record key. The StockPriceSerializer will serialize StockPrice into bytes. article about batch.size and linger.ms, Would the producer still run with acks=all? To do this we need to configure new Partitioner in Producer config with property ProducerConfig.INTERCEPTOR_CLASSES_CONFIG, When might you use a producer partitioner? From the terminal kill one of the Kafka Brokers. If a record is larger than the batch size, it will not be batched. StockPriceKafkaProducer import classes and sets up a logger. Record loss is rare but possible, and you might only see this used if a rarely missed record is not statistically significant, log aggregation, a collection of data for machine learning or dashboards, etc.

Run it as before. Run StockPriceKafkaProducer (ensure acks are set to all first). The StockSender createRandomRecord method uses randomIntBetween. Once all brokers are running, run create-topic.sh as follows.

Run it as before. Run StockPriceKafkaProducer (ensure acks are set to all first). The StockSender createRandomRecord method uses randomIntBetween. Once all brokers are running, run create-topic.sh as follows. You should see log messages from When might you use an producer interceptor? Yes. instances are bytes. This setting allows the Producer to send requests containing multiple batches. The 'gzip' has the maximum compression ratio. according to the value passed to compression.type. A producer interceptor intercepts records a producer sends to broker and intercepts after acks are sent. Only the partition leader accepts send requests from a Producer. No. How can you access Metrics for the Kafka Producer? Now lets enable batching in the StockPriceKafkaProducer by setting batch size to 16K. Something worth mentioning is that different kinds of data respond differently Then take another broker away. computers are very helpful while doing calculations, it is also true that Broker 0 and Broker 1. You implemented batching and compression and used metrics to see how it was or was not working. The main method of StockPriceKafkaProducer increases thread pool size by 1 to fit metrics reporting. The run method drains Kafka topic. need to specify the compression in Kafka Producer, Consumer will decompress Pass config property to importantStocks. It will reduce the bandwidth that will make users increase the net messages which are sent to the broker. TimeoutException - If the time taken for fetching metadata or allocating memory exceeds max.block.ms, or getting acks from Broker exceed timeout.ms, etc. The consumers commit some CPU cycles for decompression. Kafka Consulting, Cassandra Consulting, In this tutorial, you are going to create advanced Kafka Producers. data for more efficient storage. If you start getting request timeouts and you increase the request.timeout.ms why might this not be a good idea? The type can be 'gzip', 'snappy', 'lz4', or 'none'(default). Please mail your requirement at [emailprotected] Duration: 1 week to 2 week. We will run the StockPriceKafkaProducer. Durability is a tradeoff between throughput and consistency.

The above code imports Kafka classes and sets up the logger and calls createProducer to create a KafkaProducer. Kubernetes Security Training, You can write your own. The importantStock are the ones that go into priority queue. There are two forms of send method. Serializers work for keys and values, and you set them up with the Kafka Producer properties value.serializer, and key.serializer. Make this change in all of the configuration files for the brokers under the config directory of the lab (config/server-0.properties, config/server-1.properties, and config/server-2.properties). In turn, the consumers will read the data based on the decisions made by Lets start by disabling batching in the StockPriceKafkaProducer. Note that the Messages are rejected since there are fewer in-sync replicas than required. The important stocks are IBM and UBER in this example and are the only ones that will go into the last partition. StockPrice - holds a stock price has a name, dollar, and cents, StockPriceKafkaProducer - Configures and creates KafkaProducer, StockAppConstants - holds topic and broker list, StockPriceSerializer - can serialize a StockPrice into byte[], StockSender - generates somewhat random stock prices for a given StockPrice name, Runnable, 1 thread per StockSender and shows using KafkaProducer from many threads. If you set retry > 0, then you should also set max.in.flight.requests.per.connection to 1, or there is the possibility that a re-tried message could be delivered out of order.

Observe the changes to ISRs and partition to broker ownership. Once that is running, you will need to run create-topic.sh. Such type of batch is known as a Producer Batch. partitioner that we defined to send importantStocks to a special partition. At least three in-sync replicas must respond before send is considered successful. You will disable batching and observer metrics, then you will As we have seen that the producer sends data to the Kafka in the text format, commonly called the JSON format. Call metrics() on the producer. It is a measure of broker to broker latency of the request. The acks config setting is the write-acknowledgment received count required from partition leader before the producer write request is deemed complete. This will force at least three in-sync replicas (ISRs) have to respond for our producer to get an ack from the Kafka Broker.

This denotes the total memory (in bytes) that the producer can use to buffer records to be sent to the broker. In this lab we will configure producer durability. disk (does anybody out there still use a floppy reader?) The interceptors implement the ProducerInterceptor interface.

There is the send method with a callback and one without a callback. This is a timesaver! We provide onsite Go Lang training which is instructor led. When might you use an producer interceptor? Why or Why not? Also use replica verification to see when the broker catches up. Which broker is the leader of partition 1? Lastly you will enable compression, and then observe results. We hope you enjoyed this article. Modify bin/create-topic.sh and add add config min.insync.replicas=2 A producer is a type of Kafka client that publishes records to Kafka cluster. The MetricPair is helper class that has a Metric and a MetricName. The producer must be closed to not leak resources, i.e., connections, thread pools, buffers. You can set this so that the Producer will wait this long before sending if batch size not exceeded. The StockPriceKafkaProducer main method creates a Kafka producer, then creates StockSender list passing each instance the producer. IMPORTANT STOCK: If stockName is in the importantStocks HashSet then put it in partitionNum = (partitionCount -1) (last partition).

We created a MetricsProducerReporter which periodically prints out metrics for a Producer. The StockSender Delays random time between delayMin and delayMax, then sends a random StockPrice between stockPriceHigh and stockPriceLow. The acks=all or acks=-1 is all acknowledgment which means the leader gets write confirmation from the full set of ISRs before sending an ack back to the producer. The above sets the partitioner.class to StockPricePartitioner which is a custom From the terminal kill one of the Kafka Brokers. to software that relies on a dictionary with the goal of using a fraction of Why should you set max.in.flight.requests.per.connection if you use a retry count greater than 0? The smaller the batch size the less the throughput and performance. You setup max inflight messages and retry back off.

The Producer config property partitioner.class sets the partitioner. You can use batching to reduce the amount of IO. If youre a fan of reading our blog posts, you might have observed that a lot This tutorial covers advanced producer topics like custom serializers, ProducerInterceptors, custom Partitioners, timeout, record batching & linger, and compression. Now lets enable linger in the StockPriceKafkaProducer by setting the linger 10 ms. Next we startup ZooKeeper if needed, and start or restart Kafka brokers as before. USA The objectives of this lab is to setup Kafka producer metrics and use the replication verification command line tool. JavaTpoint offers college campus training on Core Java, Advance Java, .Net, Android, Hadoop, PHP, Web Technology and Python. After you do this rerun StockPriceKafkaProducer and check Consumer stats and Producer stats. larger batches. The Partitioner interface is used Stop a server while producer is running. The new MetricsProducerReporter is passed the producer from createProducer. The StockProducerInterceptor overrides the onSend method and increments onSendCount.

Setting the Producer config ProducerConfig.ACKS_CONFIG (acks config for producer) to 1. How can LINGER_MS_CONFIG to increase throughput? Since the Producer can run with 1 down broker, notice that the replication lag can get really far behind. After you are done, restart Zookeeper if needed and then restart the servers. Serializers work for keys and values, and you set them up with the Kafka Producer properties value.serializer, and key.serializer. Mail us on [emailprotected], to get more information about given services. If not already, startup ZooKeeper as before and then startup the three Kafka brokers using scripts described earlier. Most use cases will use acks=all and set a min.insync.replicas > 1. We will configure our Producer config and set the config property: interceptor.classes to our ProducerInterceptor which we will define shortly. Every 100 records StockSender displayRecordMetaData method gets called, which prints out record info, and recordMetadata info: The Producer blocks up to max.block.ms if buffer.memory is exceeded. | The Producer will not work if two brokers go down because min.insync.replicas=2, thus two replicas have to be up besides leader. The above sets the interceptor.classes to StockProducerInterceptor which is an example Apache Kafka and the Apache Kafka Logo are trademarks of the Apache Software Foundation. to take into consideration when planning the architecture of your pipeline, The above sets the Producer config ProducerConfig.LINGER_MS_CONFIG (linger.ms) config property to to 100 ms, based on the hash of the record key. The StockPriceSerializer will serialize StockPrice into bytes. article about batch.size and linger.ms, Would the producer still run with acks=all? To do this we need to configure new Partitioner in Producer config with property ProducerConfig.INTERCEPTOR_CLASSES_CONFIG, When might you use a producer partitioner? From the terminal kill one of the Kafka Brokers. If a record is larger than the batch size, it will not be batched. StockPriceKafkaProducer import classes and sets up a logger. Record loss is rare but possible, and you might only see this used if a rarely missed record is not statistically significant, log aggregation, a collection of data for machine learning or dashboards, etc.