Márcio Alves holds a postgraduate degree in Software Engineering from FASP and an MBA in IT Governance from IPT-USP.

An interesting feature of ksqlDB is the ability to define UDFs or UDAFs (user-defined functions and user-defined aggregate functions) when the transformations we want to apply to the streams are so complex that no predefined function is available.
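ksqlDB UDAFs are implemented in Java. As we understand the contract, a UDAF supplies four steps: `initialize` (the starting accumulator), `aggregate` (fold in each new value), `merge` (combine two partial accumulators, for example when session windows merge), and `map` (turn the accumulator into the emitted result). The Python class below is only an analogy of that shape, computing an average. The class and function names are hypothetical, and this is not ksqlDB's API.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class AvgState:
    """Accumulator for the hypothetical average: running sum and count."""
    total: float = 0.0
    count: int = 0


class AverageUdaf:
    """Python analogy of a UDAF's shape: initialize, aggregate, merge, map."""

    def initialize(self) -> AvgState:
        # Starting accumulator for a new key or window.
        return AvgState()

    def aggregate(self, current: float, agg: AvgState) -> AvgState:
        # Fold one new value into the accumulator.
        return AvgState(agg.total + current, agg.count + 1)

    def merge(self, one: AvgState, two: AvgState) -> AvgState:
        # Combine two partial accumulators.
        return AvgState(one.total + two.total, one.count + two.count)

    def map(self, agg: AvgState) -> float:
        # Convert the accumulator into the value emitted downstream.
        return agg.total / agg.count if agg.count else 0.0


def run_udaf(udaf: AverageUdaf, values: Iterable[float]) -> float:
    agg = udaf.initialize()
    for value in values:
        agg = udaf.aggregate(value, agg)
    return udaf.map(agg)


print(run_udaf(AverageUdaf(), [10.0, 20.0, 30.0]))  # 20.0
```

Keeping the accumulator separate from the final value is what allows partial results to be merged before `map` is applied.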

Kafka Connect allows you to bring data into Kafka from external sources (such as Amazon Kinesis or RabbitMQ) and copy data out to sinks, that is, data storage targets (such as Elasticsearch or Amazon S3).

You'll see that the commands are very self-explanatory. First of all, let's stop the Confluent services and start them again. Note that this view uses a filter to show only logs tagged `service:ksql`.

Any ideas or feedback would be highly appreciated.

Remember that the Confluent Platform package already includes everything related to Kafka in the same folder. Confluent Platform is an event streaming platform built on Apache Kafka. Stream processing frameworks work either in micro-batches, as Spark Structured Streaming and Storm (in its Trident version) do, or in real time, as Flink and Storm (by default) do.

He is currently an IT Specialist and Solutions Architect at Via Varejo S.A. and can be found on LinkedIn.

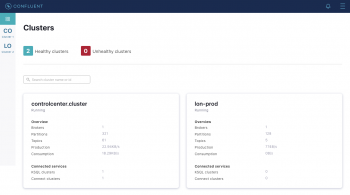

An alternative for loading data with ksqlDB and Schema Registry.

You can filter, aggregate, and explore your ksqlDB service logs to find the cause of a problem, and then export your results to a dashboard or monitor to keep an eye on this data in the future. If the values of these metrics rise unchecked, you need to troubleshoot the problem before it interferes with Kafka clients' access to Schema Registry and slows them down. The pre-built dashboard shown above surfaces useful metrics to help you understand the health of your Confluent Platform environment. For example, a consumer that shows an increase in latency at the same time that its host shows rising CPU usage may need to be moved to a larger host.

To follow the data as it arrives, query the stream with `ksql> select * from complaints_avro EMIT CHANGES;`. Connectors manage tasks, which copy the data.

There are other use cases, such as joining data from several topics into a single topic, aggregating data to generate real-time metrics, and development following the Event Sourcing pattern. Each of these would make good material for another article.
Building a data ingestion architecture with ksqlDB, Schema Registry, and Kafka Connect

Because of this characteristic of the plugin, we added the ksqlDB and Schema Registry tools, both developed by Confluent, to the architecture.

Start, stop, and check the state of the Confluent Platform. Specifically, Confluent Platform simplifies connecting data sources to Kafka, building streaming applications, and securing, monitoring, and managing your Kafka infrastructure. At its core is Apache Kafka, one of the most popular open-source distributed event streaming platforms. To connect to other systems, Confluent provides connectors and uses the Kafka Connect API to link Kafka to databases, key-value stores, search indexes, and file systems. The ksqlDB component is Kafka's streaming SQL engine. You can use it for ETL and for materialized views, which precompute query results to speed up reads.

He specializes in team leadership, solution architecture, organizational transformation, Agile Architecture, Domain-Driven Design, and heuristics for building better software, and can be reached on LinkedIn.

You'll see that, although the Avro schema has changed, the old stream still treats the data according to the first schema. To work with the second (newer) schema, let's create a new stream. Then describe it to confirm that it takes the new field of the updated schema into account.
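The behaviour described above mirrors Avro schema resolution. A reader ignores fields it does not know, and it fills fields it expects but that are missing from the data with their declared default. The plain-Python sketch below imitates that rule with two hypothetical field lists; it does not use an Avro library. The field names and the default of 0 are illustrative assumptions, not the article's schema. A stream whose columns were fixed when it was created behaves like the v1 reader.

```python
from __future__ import annotations

from typing import Any

REQUIRED = object()  # marker: the field has no default value

# Hypothetical field lists standing in for schema versions 1 and 2.
V1_FIELDS = [("customer_name", REQUIRED), ("complaint_type", REQUIRED)]
V2_FIELDS = V1_FIELDS + [("number_of_rides", 0)]  # new field with a default


def read_with(fields, written: dict[str, Any]) -> dict[str, Any]:
    """Resolve a written record against a reader's field list.

    Fields unknown to the reader are ignored; reader fields missing from
    the record take their default, or raise if they have none.
    """
    result: dict[str, Any] = {}
    for name, default in fields:
        if name in written:
            result[name] = written[name]
        elif default is not REQUIRED:
            result[name] = default
        else:
            raise ValueError(f"missing required field {name!r}")
    return result


old_record = {"customer_name": "Ana", "complaint_type": "Late driver"}
new_record = {**old_record, "number_of_rides": 7}

print(read_with(V1_FIELDS, new_record))  # old stream: the new field is dropped
print(read_with(V2_FIELDS, old_record))  # new stream: the default fills the gap
```

Giving a new field a default is what keeps old records readable under the new version.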

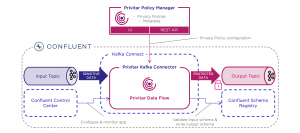

This article presents the use of ksqlDB with Schema Registry. Through Kafka Connect, the two move data quickly and easily while taking advantage of Apache Kafka's scalability. All the source code used in this article can be found on GitHub at the following address:

To use fewer resources of the Kubernetes cluster, especially memory, we chose to build the Kafka Connect image from the Apache Kafka image produced by the Strimzi project. Strimzi belongs to the Cloud Native Computing Foundation and offers an operator with many features that make it easier to deploy Apache Kafka ecosystem tools on a Kubernetes cluster.

We have already experimented a bit with ksqlDB. With this capability, if the data transformations don't need to be very sophisticated, you can skip other frameworks such as Spark Streaming or Flink and do everything inside the same Kafka cluster. As a result, there is less infrastructure to manage, and the associated costs drop with it. If you're using Kafka as a data pipeline between microservices, Confluent Platform makes it easy to copy data into and out of Kafka, validate the data, and replicate entire Kafka topics. An increase in errors could indicate a problem with the REST Proxy's configuration, or malformed requests coming from REST clients.

The topic has been created, and the schema can be seen in Confluent Control Center (CCC). The following image shows the high-level structure of ksqlDB.

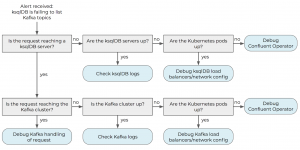

ksqlDB provides an easy-to-use yet powerful interactive SQL interface for stream processing on Kafka, without writing code in a programming language such as Java or Python. Transforming messages by applying an Avro schema is just one of many possible applications of ksqlDB.

A very interesting feature of Kafka combined with Confluent Schema Registry is the ability to register changes in the format of incoming data, so that data in the new format is handled correctly.

Now install the Confluent Platform. Packing everything into one folder, so that you can run a Kafka cluster locally with Kafka Streams and the ksqlDB interface, is really a game changer. Because writing Kafka applications directly has traditionally been harder, it is very common to see architectures in which Kafka sits only at the front of the data pipeline. There it collects and stores incoming records as the fastest technology, and some other, friendlier framework processes and manipulates the data from Kafka. This holds even when only a single application follows the Kafka step.

The graph alone doesn't indicate the cause of the problem, but you can select View related logs to troubleshoot the issue quickly. The screenshot below shows the number of Kafka Connect workers and tasks, the amount of time the workers spend rebalancing their tasks, and the count of failed tasks.
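To make the worker/task relationship concrete, here is a deliberately simplified round-robin assignment of connector tasks to workers, recomputed when a worker leaves. This is a sketch under strong assumptions. The real Kafka Connect rebalance protocol (incremental cooperative rebalancing in recent versions) is more elaborate and tries to move as few tasks as possible. The task and worker names are hypothetical.

```python
from __future__ import annotations


def assign(tasks: list[str], workers: list[str]) -> dict[str, list[str]]:
    """Round-robin tasks over workers (tasks sorted for a deterministic plan)."""
    if not workers:
        raise ValueError("at least one worker is required")
    plan: dict[str, list[str]] = {w: [] for w in workers}
    for i, task in enumerate(sorted(tasks)):
        plan[workers[i % len(workers)]].append(task)
    return plan


def moved_tasks(before: dict[str, list[str]], after: dict[str, list[str]]) -> set[str]:
    """Tasks whose owning worker changed between two plans."""
    owner_before = {t: w for w, ts in before.items() for t in ts}
    owner_after = {t: w for w, ts in after.items() for t in ts}
    return {t for t, w in owner_after.items() if owner_before.get(t) != w}


tasks = ["jdbc-sink-0", "jdbc-sink-1", "jdbc-sink-2", "jdbc-sink-3"]
before = assign(tasks, ["worker-a", "worker-b"])
after = assign(tasks, ["worker-a"])  # worker-b left the group
print(before)
print(moved_tasks(before, after))
```

In this toy model, the moved tasks are the ones that would be stopped and restarted on another worker.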

ksqlDB was built on top of the Kafka Streams framework and aims to make it easier to use. Now let's create version 2 of the schema by executing the following command.
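You can also register a new schema version through the Schema Registry REST API. Its `POST /subjects/<subject>/versions` endpoint takes a JSON body in which the Avro schema is itself a JSON-encoded string. The sketch below only builds that request with the Python standard library and does not send it. The registry URL (`http://localhost:8081`, the usual local default), the subject name, and the schema fields are assumptions.

```python
import json
import urllib.request

REGISTRY_URL = "http://localhost:8081"  # assumed local Schema Registry
SUBJECT = "complaints_avro-value"       # hypothetical subject (<topic>-value naming)

# Hypothetical version 2 of the schema: one new field with a default,
# so records written with version 1 remain readable.
schema_v2 = {
    "type": "record",
    "name": "complaint",
    "fields": [
        {"name": "customer_name", "type": "string"},
        {"name": "complaint_type", "type": "string"},
        {"name": "number_of_rides", "type": "int", "default": 0},
    ],
}


def build_register_request(subject: str, schema: dict) -> urllib.request.Request:
    # The schema is serialized to a string, then wrapped in another JSON object.
    body = json.dumps({"schema": json.dumps(schema)}).encode("utf-8")
    return urllib.request.Request(
        f"{REGISTRY_URL}/subjects/{subject}/versions",
        data=body,
        headers={"Content-Type": "application/vnd.schemaregistry.v1+json"},
        method="POST",
    )


request = build_register_request(SUBJECT, schema_v2)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```

The double encoding is easy to miss. Sending the schema as a nested object instead of a string is a common cause of rejected registrations.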

To list the existing topics, run `show topics;`. The complaints topic is created with `kafka-topics --zookeeper localhost:2181 --create --partitions 1 --replication-factor 1 --topic COMPLAINTS_AVRO`. On Kafka 3.0 and later, `kafka-topics` no longer accepts `--zookeeper`, so use `--bootstrap-server localhost:9092` instead.

The Confluent Platform project is distributed under an open-source license with some restrictions. The second command creates a stream from an existing topic. If the record does not exist yet, the JDBC Sink connector executes an INSERT command instead. The use case in this article is a delivery dispatch system that must keep its couriers' current positions in a PostgreSQL database in order to optimize delivery times.

While Kafka Connect workers are rebalancing, at least some of the tasks running in the cluster are paused, which can reduce your cluster's throughput. Monitoring Confluent Platform with Datadog can give you the data you need to pinpoint and fix problems quickly, and help you meet your SLOs for reading, writing, and replicating data. This makes it easy to search and filter within the Confluent Platform dashboard to track the replication of a particular topic. This gives ksqlDB even more power, and lets architects drop other technologies such as Flink, Storm, or Spark Structured Streaming from the data pipeline.
The Collector is configured to publish to Kafka with `KAFKA_PRODUCER_ENCODING: 'protobuf'`, while spans generated by the HotROD app are sent directly to the Collector over HTTP at `jaeger-collector:14268/api/traces`.

Let's look at the streams to understand how they behave when we change the schema. You can also retrieve schema versions with a `curl` command, in addition to viewing them in CCC. The stream description starts with `Name : COMPLAINTS_AVRO` and `Timestamp field : Not set using`

You can use Confluent's REST Proxy endpoints to produce or consume Kafka messages, and to get information about your cluster's producers, consumers, partitions, and topics.
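Here is an illustration of the REST Proxy endpoints mentioned above. The v2 API produces JSON records with `POST /topics/<topic>` and the `application/vnd.kafka.json.v2+json` content type, wrapping the messages in a `records` array. This sketch builds such a request without sending it. The proxy address (`http://localhost:8082`), the topic name, and the record contents are assumptions.

```python
import json
import urllib.request

REST_PROXY_URL = "http://localhost:8082"  # assumed local REST Proxy


def build_produce_request(topic: str, values: list) -> urllib.request.Request:
    # Each message becomes {"value": ...}; record keys are optional and omitted.
    payload = {"records": [{"value": v} for v in values]}
    return urllib.request.Request(
        f"{REST_PROXY_URL}/topics/{topic}",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/vnd.kafka.json.v2+json"},
        method="POST",
    )


req = build_produce_request("test_json", [{"name": "Alice", "countrycode": "US"}])
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```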

To get even richer context around your metrics, you can also configure Datadog to collect logs from any of the Confluent Platform components. This makes it easy to distinguish the source of metrics and even to correlate application performance with resource metrics.

Our focus here is using Confluent Control Center instead of the command line for common tasks such as monitoring the Kafka cluster and the processed data, and inspecting schema changes. A cluster installation would be considerably harder. Even so, ksqlDB represents a so-called democratization: any company can use such a powerful technology without paying for expensive, fully proprietary licenses. With the introduction of ksqlDB, this default architecture is very likely to change in many upcoming projects.

The JDBC Sink Connector plugin requires the topic's messages to use a format with a declared schema (such as Apache Avro, JSON Schema, or Protobuf), which specifies the data types of the attributes as well as the primary key. ksqlDB will consume the messages from the `locations` topic, convert them from JSON to Avro, and then produce them to the `locations_as_avro` topic.
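The JDBC Sink needs a declared schema because raw JSON carries no type or key information. The plain-Python sketch below shows the idea of attaching a declared schema (field types plus a key field) to schemaless JSON messages before they are written to a table. It does not produce Avro bytes. The field names, including `PROFILEID`, are hypothetical stand-ins for the `locations` messages.

```python
from __future__ import annotations

import json

# Hypothetical declared schema for a location message: field -> type.
LOCATION_FIELDS = {"PROFILEID": str, "LATITUDE": float, "LONGITUDE": float}
KEY_FIELD = "PROFILEID"


def to_typed_row(raw: str) -> tuple[str, dict]:
    """Parse one JSON message and coerce it to the declared types."""
    data = json.loads(raw)
    missing = [f for f in LOCATION_FIELDS if f not in data]
    if missing:
        raise ValueError(f"message lacks declared fields: {missing}")
    # Undeclared fields (such as "speed" below) are dropped.
    row = {f: typ(data[f]) for f, typ in LOCATION_FIELDS.items()}
    return row[KEY_FIELD], row


key, row = to_typed_row('{"PROFILEID": "c-17", "LATITUDE": "-23.55", "LONGITUDE": -46.63, "speed": 12}')
print(key, row)
```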

Install Kafka Connect Datagen and generate test data. Operating ksqlDB through the web interface is equivalent to running `ksql http://localhost:8088` on the command line. It is very likely that you already have Java installed.

Kafka Connect has the concept of input (source) and output (sink) data connectors, which are implemented through specialized plugins. The `auto.create` and `auto.evolve` properties tell the JDBC Sink connector to create the table in the database if it does not exist yet, and to add any columns that are missing. The Confluent Platform dashboard brings this data into a single view, so you can easily correlate trends in related metrics.

The first command changes the offset of ksqlDB's Kafka consumer so that it reads from the beginning of the topic. The third command creates a stream from the previously created stream. In this case, ksqlDB creates a Kafka consumer/producer that runs as a daemon, called a query, to fetch the data, convert its format to Avro, and write the messages to a new topic.
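You can also submit the three commands described above through the ksqlDB REST API's `/ksql` endpoint. In that API, the offset setting is passed as a stream property rather than with `SET`. The sketch below only builds the request. The server address comes from the text (`http://localhost:8088`); the column list of `locations` is a hypothetical assumption.

```python
import json
import urllib.request

KSQLDB_URL = "http://localhost:8088"

STATEMENTS = (
    # Second command: a stream over the existing JSON topic (columns are hypothetical).
    "CREATE STREAM locations (profileId VARCHAR, latitude DOUBLE, longitude DOUBLE) "
    "WITH (KAFKA_TOPIC='locations', VALUE_FORMAT='JSON'); "
    # Third command: a persistent query that rewrites the data as Avro into a new topic.
    "CREATE STREAM locations_as_avro "
    "WITH (KAFKA_TOPIC='locations_as_avro', VALUE_FORMAT='AVRO') "
    "AS SELECT * FROM locations;"
)


def build_ksql_request(statements: str) -> urllib.request.Request:
    body = {
        "ksql": statements,
        # First command: read the source topic from the beginning.
        "streamsProperties": {"ksql.streams.auto.offset.reset": "earliest"},
    }
    return urllib.request.Request(
        f"{KSQLDB_URL}/ksql",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/vnd.ksql.v1+json"},
        method="POST",
    )


req = build_ksql_request(STATEMENTS)
print(req.get_method(), req.full_url)
# To actually send it: urllib.request.urlopen(req)
```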
Inserting JSON data from an Apache Kafka topic into a relational database is a very common scenario. It can be solved in several ways, from developing an Apache Kafka consumer service that writes the data to the database, to using an ETL (Extract, Transform, Load) tool such as Pentaho or a dataflow tool such as Apache NiFi. Common problems with these approaches include:

- Developers' limited experience building consumers for Apache Kafka;
- Technical code that adds little value to the business;
- Missing controls, such as error handling, retries, and updates by primary key;
- Poor integration between development teams and analytics teams, or the teams responsible for the ETL or dataflow tools.

ksqlDB works with two types of collections: streams and tables. A stream is an immutable (append-only) collection of events. As an example, consider a customer's credit score, which can change over time.

Now that the ksqlDB server is up, let's play with the system a bit. In a separate terminal, start the ksqlDB CLI by typing `ksql`. To make the Confluent commands available, add them to your path with `export PATH=${CONFLUENT_HOME}/bin:$PATH`. The `local` commands are intended for a single-node development environment only, NOT for production use. Running `ksql> list streams;` shows the `KSQL_PROCESSING_LOG | default_ksql_processing_log | JSON` stream.

I am trying to get ksqlDB to deserialize Protobuf messages, without much success, I am afraid. More specifically, the Jaeger Collector publishes Jaeger spans to a Kafka broker. I have successfully registered the Jaeger Protobuf schema model with Schema Registry under the `/subjects/jaeger-spans-value/` route, and the schema string is properly escaped.
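A frequent source of deserialization errors like this one is a framing mismatch. Data that ksqlDB reads through Schema Registry is expected to start with Confluent's wire-format header: a zero magic byte followed by a 4-byte big-endian schema ID. A producer that writes plain Protobuf bytes does not add that header. Whether the Jaeger Collector does so here is an assumption to verify, not a diagnosis. The helper below is a hypothetical debugging aid that inspects a raw message value for the header. Because Protobuf field numbers start at 1, a non-empty plain Protobuf message never begins with a zero byte, so the check is a useful first signal.

```python
import struct
from typing import Optional, Tuple

MAGIC_BYTE = 0


def confluent_header(payload: bytes) -> Optional[Tuple[int, bytes]]:
    """Return (schema_id, remainder) if the payload carries the Confluent header.

    For Protobuf, the remainder still starts with the message-index list,
    which this helper does not decode.
    """
    if len(payload) < 5 or payload[0] != MAGIC_BYTE:
        return None
    (schema_id,) = struct.unpack(">i", payload[1:5])
    return schema_id, payload[5:]


framed = b"\x00" + struct.pack(">i", 42) + b"\x00\x0a\x03abc"
plain = b"\x0a\x03abc"  # field 1, length-delimited, value "abc"
print(confluent_header(framed))  # (42, b'\x00\n\x03abc')
print(confluent_header(plain))   # None
```

You can feed it a raw value consumed from the topic (for example with a plain Kafka consumer) to see which framing the producer used.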
To remove the stream together with its underlying topic, run `ksql> drop stream if exists users_stream delete topic;`.

Kafka Connect, combined with ksqlDB, delivers a simple and powerful solution for moving data from Kafka into a relational database such as PostgreSQL.

If you're using Confluent's Replicator connector to copy topics from one Kafka cluster to another, Datadog can help you monitor key metrics like latency, throughput, and message lag. Message lag is the number of messages that exist on the source topic but haven't yet been copied to the replicated topic.
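The lag definition above reduces to simple offset arithmetic. Below is a hypothetical helper that computes it per partition and in total. The offsets are made-up numbers; in practice they would come from the source cluster's log-end offsets and from Replicator's progress.

```python
from __future__ import annotations


def replication_lag(source_end: dict[int, int], replicated: dict[int, int]) -> dict[int, int]:
    """Per-partition lag: source log-end offset minus the offset already copied.

    Partitions with nothing copied yet are assumed to start at offset 0.
    """
    return {p: max(end - replicated.get(p, 0), 0) for p, end in source_end.items()}


lag = replication_lag({0: 1500, 1: 980, 2: 40}, {0: 1450, 1: 980})
print(lag, sum(lag.values()))  # {0: 50, 1: 0, 2: 40} 90
```

An alert on the summed value catches a stalled replicator. The per-partition view shows whether a single partition is stuck.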

In streaming applications, many large and medium-sized companies have used Kafka to solve real-time and exactly-once processing problems. Now let's install the standalone Confluent CLI (command line interface). To access Confluent Control Center, open `http://localhost:9021` in your browser.

He has worked in information systems for more than 20 years, leading teams according to agile principles. His work covers architecture solutions for mission-critical scenarios, software development, information security, and mobility in the retail, finance, and sanitation sectors.

ksqlDB (formerly known simply as KSQL) can be considered an event streaming database. It is aimed at building stream processing applications (for example, for fraud detection) or applications based on the Event Sourcing pattern. ksqlDB gives the programmer a SQL-like interface for working with Kafka Streams. There used to be a caveat, though: programming a Kafka application was limited to Java, and development was more complex than with Spark Structured Streaming, or even Flink or Storm.

It is worth noting that all of this runs inside a Docker Compose stack. I have tested the same setup with JSON as the Jaeger span encoding format, and it works like a dream.

Let's create a new topic to represent complaints from the users. Note the available options regarding the schema. If no users were shown, it's because you closed the ksql session and lost the session configuration. You can build connectors using the Kafka Connect framework, or download them from Confluent Hub. You can also create an alert to notify your team automatically if Replicator shows rising lag or degraded throughput, before this threatens the availability of your backup cluster.

In the `kafka-connector-jdbc.yaml` file, the `insert.mode=upsert` property tells the JDBC Sink connector to execute an UPDATE when the table already has a record whose primary key, `pk.fields="PROFILEID"`, matches the same attribute in the message (`pk.mode=record_value`).
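To illustrate what `insert.mode=upsert` with `pk.mode=record_value` and `pk.fields=PROFILEID` means, the sketch below applies the same rule to an in-memory SQLite table. If the key already exists, the row is updated; otherwise it is inserted. The connector generates the appropriate statement for the target database (PostgreSQL in this article). SQLite's `INSERT ... ON CONFLICT DO UPDATE` (SQLite 3.24+) only stands in for it here, and the table and column names are hypothetical.

```python
import sqlite3

UPSERT = (
    "INSERT INTO locations (profileid, latitude, longitude) VALUES (?, ?, ?) "
    "ON CONFLICT(profileid) DO UPDATE SET "
    "latitude = excluded.latitude, longitude = excluded.longitude"
)


def apply_records(conn: sqlite3.Connection, records: list) -> None:
    for r in records:
        # The PROFILEID value taken from the record acts as the primary key.
        conn.execute(UPSERT, (r["PROFILEID"], r["LATITUDE"], r["LONGITUDE"]))
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE locations (profileid TEXT PRIMARY KEY, latitude REAL, longitude REAL)"
)
apply_records(conn, [
    {"PROFILEID": "courier-1", "LATITUDE": -23.50, "LONGITUDE": -46.60},
    {"PROFILEID": "courier-2", "LATITUDE": -22.90, "LONGITUDE": -43.20},
    {"PROFILEID": "courier-1", "LATITUDE": -23.55, "LONGITUDE": -46.63},  # update
])
print(conn.execute("SELECT * FROM locations ORDER BY profileid").fetchall())
# [('courier-1', -23.55, -46.63), ('courier-2', -22.9, -43.2)]
```

Creating the table by hand here plays the role that `auto.create` plays for the connector.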
Confluent, built by the original creators of Apache Kafka, extends Kafka's strengths with enterprise-grade features and removes the burden of managing or monitoring Kafka. Confluent integrates historical and real-time data into a single, central source of truth. This makes it easy to build a new category of modern event-driven applications, obtain a common data pipeline, and unlock powerful new use cases with full scalability, performance, and reliability. Confluent Platform lets you focus on getting business value from your data without worrying about the underlying mechanics, such as how data is transported or integrated between different systems.

This new integration provides visibility into your Kafka brokers, producers, and consumers, as well as key components of Confluent Platform: Kafka Connect, REST Proxy, Schema Registry, and ksqlDB. The screenshot below shows an increase in the rate of errors returned by the REST Proxy. Each worker runs one or more connectors and coordinates with other workers to distribute the workload dynamically.

This has been called the democratization of streaming, because it has allowed smaller companies to use these powerful technologies without spending huge sums on large IT teams. Basically, we need to download and configure Java and Kafka. For Kafka, we will use Confluent Platform, which brings ksqlDB and Confluent Control Center along with it.

A strategy for streaming data using ksqlDB instead of Kafka Streams.

A stream represents a series of historical facts, whereas a table is a mutable collection. In other words, when several events with the same key are inserted into a table, reading it returns only the values of the latest occurrence.
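The stream/table distinction can be shown with the credit-score example in a few lines of plain Python. A stream keeps every event, while a table keeps only the latest value per key. Following Kafka's tombstone convention, a `None` value deletes the key. The customer keys and scores are hypothetical.

```python
# Hypothetical credit-score events as (customer key, score) pairs.
events = [("alice", 650), ("bob", 700), ("alice", 680)]


def as_stream(evts):
    """A stream keeps every event, in order (append-only)."""
    return list(evts)


def as_table(evts) -> dict:
    """A table keeps only the latest value per key; None acts as a delete (tombstone)."""
    table: dict = {}
    for key, value in evts:
        if value is None:
            table.pop(key, None)
        else:
            table[key] = value
    return table


print(as_stream(events))  # all three events
print(as_table(events))   # {'alice': 680, 'bob': 700}
```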
If your producers and consumers are written in Java, you'll see their metrics on the same dashboard, which adds context to help you understand Confluent Platform's performance. Finally, we describe the stream.

`ksql> print USERS from beginning limit 2;` prints the first two records of the `USERS` topic. Listing the streams returns:

| Stream Name | Kafka Topic | Format |
|---|---|---|
| KSQL_PROCESSING_LOG | default_ksql_processing_log | JSON |
| USERS_STREAM | USERS | DELIMITED |

The ksqlDB, Schema Registry, and Kafka Connect components will run in pods on a Kubernetes cluster.

Context diagram in Simon Brown's C4 model, prepared by the authors.

An aggregation grouped by country code returns:

| COUNTRYCODE | KSQL_COL_0 |
|---|---|
| GB | 1 |
| AU | 1 |
| US | 2 |
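A `GROUP BY` with a count over a stream is a continuously updated aggregation: each new record emits the updated count for its key, which is what `EMIT CHANGES` displays. The sketch below simulates this over hypothetical comma-delimited user records. The names and the two-column `name,countrycode` layout are assumptions, chosen so that the last update per key matches the counts shown above.

```python
from collections import Counter

# Hypothetical DELIMITED (comma-separated) values on the USERS topic: name,countrycode.
USERS = ["Alice,US", "Bob,GB", "Carol,AU", "Dan,US"]


def count_by_country(lines):
    """Yield (countrycode, running count) after every record, like EMIT CHANGES."""
    counts = Counter()
    for line in lines:
        _name, country = line.split(",")
        counts[country] += 1
        yield country, counts[country]


updates = list(count_by_country(USERS))
print(updates)  # [('US', 1), ('GB', 1), ('AU', 1), ('US', 2)]
```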

ksqlDB depends on an Apache Kafka cluster to operate, because its definitions, as well as the data, are stored in topics. These commands check the services, create the `USERS` topic, and produce messages to it:

- `confluent local services status`
- `kafka-topics --zookeeper localhost:2181 --create --partitions 1 --replication-factor 1 --topic USERS`
- `kafka-console-producer --broker-list localhost:9092 --topic USERS`

To monitor ksqlDB's activity level, you can keep an eye on the number of currently active queries (`confluent.ksql.query_stats.num_active_queries`) and on the amount of data ksqlDB queries have consumed from your data streams (`confluent.ksql.query_stats.bytes_consumed_total`).
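As its name suggests, `bytes_consumed_total` is a cumulative total, so what usually matters is its rate of change. Below is a hypothetical helper that computes a per-second rate from two samples, with basic counter-reset handling. The sample values are made up, and monitoring tools typically do this computation for you.

```python
def counter_rate(prev_value: float, curr_value: float, prev_ts: float, curr_ts: float) -> float:
    """Per-second rate of a monotonically increasing counter between two samples.

    If the counter went down (e.g. the ksqlDB server restarted), the current
    value is treated as the increase since the reset.
    """
    elapsed = curr_ts - prev_ts
    if elapsed <= 0:
        raise ValueError("samples must be in chronological order")
    delta = curr_value - prev_value
    if delta < 0:
        delta = curr_value
    return delta / elapsed


print(counter_rate(1_000_000, 1_600_000, 0.0, 60.0))  # 10000.0
```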

Just type the commands again; you can achieve the same effect by using Confluent Control Center. The `value.converter=io.confluent.connect.avro.AvroConverter` property tells the JDBC Sink connector to parse the messages with the Avro Converter plugin, applying the schema obtained from the Schema Registry specified in the `value.converter.schema.registry.url` property.
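The sketch below gathers the JDBC Sink properties discussed in this article in one place. It builds a connector definition for the Kafka Connect REST API (`POST /connectors`), as an alternative to the Strimzi `kafka-connector-jdbc.yaml` file the article uses. The connector class and property names are those of Confluent's JDBC connector. The connector name, the Connect and PostgreSQL URLs, and the Schema Registry address are hypothetical placeholders, and credentials are omitted.

```python
import json
import urllib.request

CONNECT_URL = "http://localhost:8083"  # assumed Kafka Connect REST endpoint

connector = {
    "name": "locations-jdbc-sink",  # hypothetical connector name
    "config": {
        "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
        "topics": "locations_as_avro",
        "connection.url": "jdbc:postgresql://postgres:5432/deliveries",  # placeholder
        "insert.mode": "upsert",
        "pk.mode": "record_value",
        "pk.fields": "PROFILEID",
        "auto.create": "true",
        "auto.evolve": "true",
        "value.converter": "io.confluent.connect.avro.AvroConverter",
        "value.converter.schema.registry.url": "http://schema-registry:8081",  # placeholder
    },
}

request = urllib.request.Request(
    f"{CONNECT_URL}/connectors",
    data=json.dumps(connector).encode("utf-8"),
    headers={"Content-Type": "application/json"},
    method="POST",
)
print(request.get_method(), request.full_url)
# To actually send it: urllib.request.urlopen(request)
```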