That is possible thanks to Kafka's changelog architecture.

    { "event_ts": "2020-02-17T15:22:00Z", "person": "robin", "location": "Leeds" }
    { "event_ts": "2020-02-17T17:23:00Z", "person": "robin", "location": "London" }

If you want to know more about messaging patterns and how a message is transmitted between sender and receiver, read our article. The following resources were mentioned during the presentation or are useful additional information.

A presentation at LJC Virtual Meetup by Robin Moffatt (@rmoff, #ljcjug).

ksqlDB - Confluent Control Center: the connector SOURCE_MYSQL_01 is defined WITH the properties connector.class, database.hostname, and table.whitelist.

    CREATE STREAM ORDERS_NO_ADDRESS_DATA AS
      SELECT TIMESTAMPTOSTRING(ROWTIME, 'yyyy-MM-dd HH:mm:ss') AS ORDER_TIMESTAMP,
             ORDERID, ITEMID, ORDERUNITS
        FROM ORDERS;

That gives unlimited scalability in terms of memory and disk space.

    { "id": "Item_9", "make": "Boyle-McDermott", "model": "Apiaceae", "unit_cost": 19.9 }

    CREATE TABLE PERSON_MOVEMENTS AS
      SELECT PERSON,
             COUNT_DISTINCT(LOCATION) AS UNIQUE_LOCATIONS,
             COUNT(*) AS LOCATION_CHANGES
        FROM MOVEMENTS
       GROUP BY PERSON;

Let's unpack them slowly as we progress through the coming sections. However, ksqlDB ensures real-time stream processing within the services.

Introduction to ksqlDB

Attendees should be familiar with developing professional apps in Java (preferred), .NET, C#, Python, or another major programming language.

An ORDERS record with a nested address, before it is reduced to ORDERS_NO_ADDRESS_DATA:

    { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5,
      "address": { "street": "243 Utah Way", "city": "Orange", "state": "California" } }

    INSERT INTO ORDERS_COMBINED
      SELECT 'US' AS SOURCE, ORDERTIME, ITEMID, ORDERUNITS, ADDRESS
        FROM ORDERS;

Conversely, we have push queries that stream changes in the query results to your application as they occur. Microservices run as separate processes and consume in parallel from the message broker. They differ from each other in their composition.

This talk will be built around a live demonstration of the concepts and capabilities of ksqlDB.

Behind the scenes, ksqlDB uses RocksDB to store the contents of the materialized view. A table contains the current state of the world, which is the result of many changes. Aggregations can be across the entire input, or windowed (TUMBLING, HOPPING, SESSION). Take all those insert, update, and delete events and store them in a stream.

    SELECT * FROM WIDGETS WHERE WEIGHT_G > 120;
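The relationship between a table and the stream of insert, update, and delete events behind it can be sketched in a few lines of Python. This is only a conceptual illustration, not ksqlDB code: the `materialize` function, the operation names, and the sample widget rows are all hypothetical. Replaying the events in order yields the current table, which can then be filtered the way the `WIDGETS` query above filters on weight.

```python
from typing import Any, Dict, Iterable, Optional, Tuple

# Each change event is (operation, key, value). The operation names are
# illustrative and are not part of any Kafka or ksqlDB API.
ChangeEvent = Tuple[str, str, Optional[Dict[str, Any]]]


def materialize(events: Iterable[ChangeEvent]) -> Dict[str, Dict[str, Any]]:
    """Replay change events in order and return the resulting table."""
    table: Dict[str, Dict[str, Any]] = {}
    for op, key, value in events:
        if op in ("insert", "update"):
            if value is None:
                raise ValueError(f"{op} requires a value")
            table[key] = value          # latest value per key wins
        elif op == "delete":
            table.pop(key, None)        # row disappears from the table
        else:
            raise ValueError(f"unknown operation: {op}")
    return table


# Hypothetical sample data, invented for this sketch.
widget_changes = [
    ("insert", "w1", {"colour": "RED", "weight_g": 100}),
    ("insert", "w2", {"colour": "BLUE", "weight_g": 150}),
    ("update", "w1", {"colour": "RED", "weight_g": 130}),
    ("delete", "w2", None),
]

widgets = materialize(widget_changes)

# Equivalent in spirit to: SELECT * FROM WIDGETS WHERE WEIGHT_G > 120
heavy = {key: row for key, row in widgets.items() if row["weight_g"] > 120}
print(heavy)
```

The stream keeps every change, while the table keeps only the latest row per key, so the same stream can always be replayed to rebuild the table.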

    CREATE STREAM ORDERS_NY AS
      SELECT * FROM ORDERS WHERE ADDRESS->STATE = 'New York';

Windows are polling intervals that are continuously executed over the data streams.

However, if a microservice wants to access the data streams from two or more topics and these arrive with different frequencies, then the correct allocation of the data is often difficult.

Resources: developer.confluent.io and the Confluent Community Slack group.

We'll conclude the post by learning how these materialized views can be scaled and recovered from failures.

Robin Moffatt, Introduction to ksqlDB. The talk covers:

- The semantics of streams and tables, and of push and pull queries
- How to use the ksqlDB API to get state directly from the materialised store

So let's skip that for now. These are based on window-based aggregation of events.

https://rmoff.dev/ljcjug

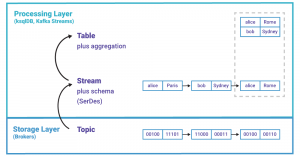

These tables are often stored locally in the stream processor.

This post explores two essential concepts in stateful stream processing, streams and tables, and how streams turn into tables that make up materialized views. The next installment of this series explores leveraging change data capture (CDC) to join a stream with a table.

We'll use ksqlDB connectors to bring in data from other systems and use this to join and enrich streams, and we'll serve the results up directly to an application, without even needing an external data store.

There are two essential concepts in stream processing that we need to understand: streams and tables. That is also called materializing the stream. Thus, it is based on continuous streams of structured event data that can be published to multiple applications in real time.

    curl -s -X POST http://localhost:8088/ksql \
      -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" \
      -d '{ "ksql": "CREATE STREAM LONDON AS SELECT * FROM MOVEMENTS WHERE LOCATION='\''london'\'';",
            "streamsProperties": { "ksql.streams.auto.offset.reset": "earliest" } }'

Desired ksqlDB queries have been identified. Work is split by partition.

As a developer, that lets you focus on the stream processing logic rather than battling state management.

    CREATE SINK CONNECTOR SINK_ELASTIC_01 WITH (
      'connector.class' = 'ElasticsearchSinkConnector',
      'connection.url'  = 'http://elasticsearch:9200',
      'topics'          = 'orders');

    CREATE SINK CONNECTOR dw WITH (
      'connector.class' = 'S3Connector',
      'topics'          = 'widgets');

Sinks: object store, data warehouse, RDBMS.

    SELECT PERSON, COUNT_DISTINCT(LOCATION) FROM MOVEMENTS GROUP BY PERSON;

    +--------+------------------+
    |PERSON  |UNIQUE_LOCATIONS  |
    +--------+------------------+
    |robin   |3                 |

Nested fields, flattened:

    { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5,
      "address-street": "243 Utah Way", "address-city": "Orange", "address-state": "California" }

In stateless mode, you process each event in isolation. But a stateful stream processor can solve that by materializing an event stream into a persistent view and then updating it as new data comes in.

The tumbling type repeats a non-overlapping interval, while the hopping type allows overlaps.

What else can ksqlDB do?

First, you can use a pull query, which retrieves results at a point in time.
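The difference between the two window types mentioned above can be made concrete with a small sketch. It is a simplified model rather than ksqlDB's implementation: timestamps are plain integers, windows are half-open intervals aligned to zero, and the function and parameter names (`size`, `advance`) are chosen only for illustration. An event falls into exactly one tumbling window, but into several hopping windows when the advance is smaller than the size.

```python
from typing import List, Tuple

Window = Tuple[int, int]  # half-open interval [start, end)


def tumbling_window(ts: int, size: int) -> Window:
    """Return the single non-overlapping window that contains ts."""
    start = (ts // size) * size
    return (start, start + size)


def hopping_windows(ts: int, size: int, advance: int) -> List[Window]:
    """Return every window of length `size`, starting at multiples of
    `advance`, that contains ts. Windows overlap when advance < size."""
    windows: List[Window] = []
    start = (ts // advance) * advance      # latest window start <= ts
    while start > ts - size:               # window still covers ts
        if start >= 0:
            windows.append((start, start + size))
        start -= advance
    return sorted(windows)


print(tumbling_window(7, size=10))
print(hopping_windows(7, size=10, advance=5))
```

With `advance` equal to `size`, the hopping case degenerates into the tumbling case, which is one way to see tumbling windows as a special case of hopping windows.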

    { "ordertime": 1560070133853, "orderid": 67, "itemid": "Item_9", "orderunits": 5 }

In the first part of this series, we learned the fundamentals of materialized views, along with their shortcomings. You can increase the processing throughput by adding more stream processors. It is also possible to turn a table into a stream.

Then it is flushed out to disk (local state) periodically.
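One way to picture how adding stream processors raises throughput is to look at how partitions are shared out. The sketch below is a deliberately simplified model with loud assumptions: keys are mapped to partitions with CRC32 as a stand-in hash (Kafka's default partitioner uses a different hash function), and partitions are dealt to processors round-robin, whereas real consumer groups use configurable assignment strategies. The function names and processor names are hypothetical.

```python
import zlib
from collections import defaultdict
from typing import Dict, List


def partition_for(key: str, num_partitions: int) -> int:
    """Map a record key to a partition. CRC32 is only a stand-in hash
    for this sketch; the same key always lands on the same partition."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def assign_partitions(num_partitions: int, processors: List[str]) -> Dict[str, List[int]]:
    """Deal partitions to processors round-robin (a simplification)."""
    assignment: Dict[str, List[int]] = defaultdict(list)
    for partition in range(num_partitions):
        owner = processors[partition % len(processors)]
        assignment[owner].append(partition)
    return dict(assignment)


# One processor owns all six partitions and all of their state.
print(assign_partitions(6, ["processor-a"]))

# Three processors each own two partitions, so each one holds
# roughly a third of the state and does a third of the work.
print(assign_partitions(6, ["processor-a", "processor-b", "processor-c"]))
```

Because a key always maps to the same partition, all events for that key are handled by whichever processor owns the partition, which is what lets each processor keep only its own share of the state locally.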

    CREATE STREAM ORDERS_CSV WITH (VALUE_FORMAT='DELIMITED') AS
      SELECT * FROM ORDERS_FLAT;

ksqlDB

ksqlDB offers two ways for client programs to bring materialized view data into applications. The management is interested in the current sales report rather than individual sales.

    SELECT PERSON, COUNT(*) FROM MOVEMENTS GROUP BY PERSON;

    +--------+------------------+
    |PERSON  |LOCATION_CHANGES  |
    +--------+------------------+
    |robin   |4                 |

    import org.apache.kafka.streams.StreamsBuilder;
    import org.apache.kafka.streams.kstream.Consumed;
    import org.apache.kafka.streams.kstream.Produced;
    // stringSerde and widgetsSerde are assumed to be defined elsewhere.
    final StreamsBuilder builder = new StreamsBuilder();
    builder.stream("widgets", Consumed.with(stringSerde, widgetsSerde))
           .filter((key, widget) -> widget.getColour().equals("RED"))
           .to("widgets_red", Produced.with(stringSerde, widgetsSerde));

The following figure shows such an event stream schematically. For instance, in a key/value store. For example, the last value of a column can be tracked when aggregating events from a stream into a table. These aggregations require maintaining a state for the stream.

    { "event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield" }
    { "event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds" }
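To see why these aggregations need state, the following sketch replays the four MOVEMENTS events from the slides and maintains per-person state, mirroring what `COUNT_DISTINCT(LOCATION)` and `COUNT(*)` grouped by `PERSON` compute. It is a plain-Python illustration, not ksqlDB's implementation; the `PersonMovements` class and its method names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Set

# The four events shown in the slides for the MOVEMENTS stream.
movements = [
    {"event_ts": "2020-02-17T15:22:00Z", "person": "robin", "location": "Leeds"},
    {"event_ts": "2020-02-17T17:23:00Z", "person": "robin", "location": "London"},
    {"event_ts": "2020-02-17T22:23:00Z", "person": "robin", "location": "Wakefield"},
    {"event_ts": "2020-02-18T09:00:00Z", "person": "robin", "location": "Leeds"},
]


class PersonMovements:
    """Keeps the state needed to answer both aggregates per person."""

    def __init__(self) -> None:
        self._locations: Dict[str, Set[str]] = defaultdict(set)  # for COUNT_DISTINCT
        self._changes: Dict[str, int] = defaultdict(int)         # for COUNT(*)

    def apply(self, event: Dict[str, str]) -> None:
        """Update the state with one event from the stream."""
        person = event["person"]
        self._locations[person].add(event["location"])
        self._changes[person] += 1

    def row(self, person: str) -> Dict[str, int]:
        """Return the current table row for a person."""
        return {
            "UNIQUE_LOCATIONS": len(self._locations.get(person, set())),
            "LOCATION_CHANGES": self._changes.get(person, 0),
        }


table = PersonMovements()
for event in movements:
    table.apply(event)

print(table.row("robin"))  # {'UNIQUE_LOCATIONS': 3, 'LOCATION_CHANGES': 4}
```

The set of locations seen so far has to survive between events, since the second Leeds event can only be recognised as a repeat if the first one is remembered.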

The following figure shows what a software architecture with Apache Kafka and ksqlDB could look like. You might want to check the current total sales breakdown per store.

    curl -s -X POST http://localhost:8088/query \
      -H "Content-Type: application/vnd.ksql.v1+json; charset=utf-8" \
      -d '{"ksql":"SELECT UNIQUE_LOCATIONS FROM PERSON_MOVEMENTS WHERE ROWKEY='\''robin'\'';"}'

Thus, subscribers get the constantly updated results of a query, or can retrieve data in request/response flows at a specific time. If we have only one instance of the stream processor, it will soon run out of allocated memory and disk space.

You have now seen how a stream processor like ksqlDB materializes a stream into local state. Especially for Apache Kafka, ksqlDB allows easy transformation of data within Kafka's data pipelines.

Stream: Topic + Schema

    +--------------------+-------+----------+
    |EVENT_TS            |PERSON |LOCATION  |
    +--------------------+-------+----------+
    |2020-02-17 15:22:00 |robin  |Leeds     |
    |2020-02-17 17:23:00 |robin  |London    |
    |2020-02-17 22:23:00 |robin  |Wakefield |
    |2020-02-18 09:00:00 |robin  |Leeds     |

Although it introduces additional latency, it works well for simple workloads and provides good scalability.
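The two access styles described above, constantly updated results versus request/response at a specific time, can be contrasted with a toy in-memory view. This is a conceptual sketch only and does not use the ksqlDB client or REST API; the `MaterializedView` class, its methods, and the sample values are hypothetical.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple


class MaterializedView:
    """Toy view supporting pull-style lookups and push-style subscriptions."""

    def __init__(self) -> None:
        self._rows: Dict[str, Any] = {}
        self._subscribers: List[Callable[[str, Any], None]] = []

    def pull(self, key: str) -> Optional[Any]:
        """Pull: return the value as of right now, then finish."""
        return self._rows.get(key)

    def subscribe(self, callback: Callable[[str, Any], None]) -> None:
        """Push: register a callback invoked on every later change."""
        self._subscribers.append(callback)

    def update(self, key: str, value: Any) -> None:
        """Apply a change and notify all push subscribers."""
        self._rows[key] = value
        for callback in self._subscribers:
            callback(key, value)


view = MaterializedView()
received: List[Tuple[str, Any]] = []
view.subscribe(lambda key, value: received.append((key, value)))

view.update("robin", 1)
view.update("robin", 2)
snapshot = view.pull("robin")   # point-in-time answer
view.update("robin", 3)         # the earlier snapshot does not change

print(snapshot)
print(received)
```

The pull caller gets one answer and must ask again to see newer data, while the push subscriber receives every change without asking.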