The following listing shows the log entries that SimpleConsumer generates when messages are retrieved. The code uses a callback object to process each message that's retrieved from the Kafka broker.


Fortunately, there are client libraries written in a variety of languages that abstract away much of the complexity that comes with programming Kafka. As mentioned at the beginning of this article, the classes published by the Apache Kafka client package are intended to make programming against Kafka brokers an easier undertaking. For example, MAX_POLL_RECORDS_CONFIG sets the maximum number of records that the consumer will fetch in one iteration. All messages in Kafka are serialized; hence, a consumer should use a deserializer to convert them to the appropriate data type. Now open a new terminal at C:\D\softwares\kafka_2.12-1.0.1. Now let's start 3 brokers in 3 separate terminals. In your real application, you can handle messages the way your business requires you to. Please read these instructions carefully before you start.

Messages are serialized before being transferred over the network. Execute .\bin\windows\kafka-server-start.bat .\config\server.properties to start Kafka. As long as the callback object publishes a method that conforms to the method signature defined by the pre-existing interface KafkaMessageHandler, the code will execute without a problem.

The callback object is passed as a parameter to the runAlways() method. This command will have no effect if delete.topic.enable is not set to true in the Kafka server.properties file. It will keep this metadata up to date throughout its life cycle.

Putting the message processing behavior in a place different from where the actual message retrieval occurs makes the code more flexible overall. CLIENT_ID_CONFIG: The ID of the producer, so that the broker can determine the source of the request. For records without a key, older Kafka clients used a round-robin algorithm by default to decide which partition receives the message; clients from version 2.4 onward use a sticky strategy that fills a batch for one partition before moving on.
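To make the round-robin idea concrete, here is a minimal sketch that uses only the Java standard library. The class name `RoundRobinSketch` and its method are hypothetical and exist only for illustration; this is not Kafka's own partitioner code.

```java
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical illustration of round-robin partition selection (not a Kafka class). */
final class RoundRobinSketch {
    private final int numPartitions;
    private final AtomicInteger counter = new AtomicInteger(0);

    RoundRobinSketch(int numPartitions) {
        if (numPartitions <= 0) {
            throw new IllegalArgumentException("numPartitions must be positive");
        }
        this.numPartitions = numPartitions;
    }

    /** Returns 0, 1, ..., numPartitions - 1 in turn, then wraps around. */
    int nextPartition() {
        // floorMod keeps the result non-negative even if the counter overflows.
        return Math.floorMod(counter.getAndIncrement(), numPartitions);
    }
}
```

With three partitions, successive calls to `nextPartition()` cycle through partitions 0, 1, and 2, so keyless messages are spread evenly.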

With the synchronous approach, the thread is blocked until the offset has been written to the broker.

In another terminal window, go to the same directory and execute the following command: You should see a steady stream of screen output. Consumers use the message offset to maintain the last read position when consuming messages from a common topic. Then you need to run the consumer, which will retrieve those messages and process them. The class that encapsulates the Kafka consumer is named SimpleConsumer. If you're new to Kafka and Java concepts, be sure to read the previous installment, A developer's guide to using Kafka with Java. The send() method uses the values of its key and message parameters to create a ProducerRecord object. These are the most important points about the Kafka producer implementation. Add this dependency to your Scala project.

Since we are going to start up 3 brokers, we will need 3 separate config/server.properties files.

In the demo topic, there is only one partition, so I have commented out this property. All reads and writes to a topic go through the leader, and the leader coordinates updating the replicas. Before you can run the demonstration project, you need to have an instance of Kafka installed on the local computer on which the project's code will run. bin/kafka-console-producer.sh and bin/kafka-console-consumer.sh in the Kafka directory are the tools that help to create a Kafka producer and a Kafka consumer, respectively. A topic is a named feed that spans an entire cluster of brokers.

Below is the command to execute in a terminal window to get Kafka up and running on a Linux machine using Docker: To see if your system has Docker installed, type the following in a terminal window: If Docker is installed, you'll see output similar to the following: If the call to which docker results in no return value, Docker is not installed. We will use the topic name "javaguides" in this example.

Which one it starts will depend on the values passed as parameters to main() at the command line. ENABLE_AUTO_COMMIT_CONFIG: When a consumer from a group receives a message, it must commit the offset of that record. Serializers are set for both the key and the value. Get started developing with Red Hat OpenShift Streams for Apache Kafka, a managed cloud service for architects and developers working on event-driven applications. A consumer can consume from multiple partitions at the same time. AUTO_OFFSET_RESET_CONFIG: For each consumer group, the last committed offset value is stored. Extract the Kafka zip in the local file system. KafkaConsumer then subscribes to the topic. The above snippet contains some constants that we will be using further. The retention period is configurable and can be configured per topic. Making send() protected in scope is a subtle point, but it's an important one from the point of view of object-oriented programming. The slowest performance. In this learning path, you'll sign up for a free Red Hat account, provision a managed Kafka instance, and connect to it using service account credentials via SSL.


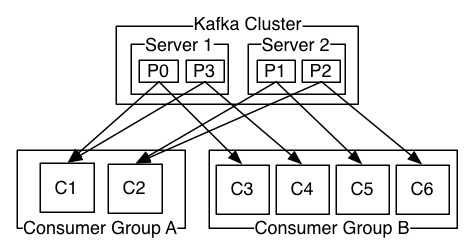

A Kafka cluster consists of one or more brokers (Kafka servers). The broker organizes messages into their respective topics and persists all the Kafka messages in a topic log file, for 7 days by default. A Kafka topic can be divided into one or more partitions, and the number of partitions per topic is configurable.

Logic in AbstractSimpleKafka does the work of intercepting process termination signals coming from the operating system and then calling the shutdown() method. Key mod-hash: Holds a map of key to partition, and all messages with the specified key go to the defined partition. If you are facing any issues with Kafka, please ask in the comments. Below is the String serializer. The name of the topic is passed as a parameter to the runAlways() method. The actual work of continuously getting messages from the Kafka broker and processing them is done by the method runAlways(). Kafka topics provide segregation between the messages produced by different producers. In this tutorial, we use Kafka as a messaging system to send messages between producers and consumers.
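The key mod-hash strategy mentioned above can be sketched in a few lines. This is an illustration under stated assumptions: the class `KeyHashSketch` is hypothetical, and `Arrays.hashCode` stands in for the murmur2 hash that Kafka's default partitioner applies to the serialized key.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

/** Hypothetical illustration of key-hash partitioning (not a Kafka class). */
final class KeyHashSketch {
    /** Maps a key to a partition index in the range [0, numPartitions). */
    static int partitionFor(String key, int numPartitions) {
        byte[] keyBytes = key.getBytes(StandardCharsets.UTF_8);
        // Stand-in hash for illustration; Kafka itself uses murmur2 on the key bytes.
        int hash = Arrays.hashCode(keyBytes);
        // floorMod avoids negative partition numbers for negative hash values.
        return Math.floorMod(hash, numPartitions);
    }
}
```

Because the partition depends only on the key and the partition count, every message with the same key lands on the same partition, which preserves per-key ordering as long as the number of partitions does not change.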

It will send messages to the topic devglan-test. The ZooKeeper and Kafka services should be up and running. A Kafka cluster has multiple brokers, and each broker can run on a separate machine to provide multiple data backups and distribute the load. Assuming that you have JDK 8 installed already, let us start with installing and configuring ZooKeeper on Windows. Download ZooKeeper from https://zookeeper.apache.org/releases.html.

If a leader fails, a replica in the list is assigned as the new leader. In a microservice architecture, when an event happens within a service, other dependent services have to be notified about it to update their state or to perform an action. of brokers and clients do not connect directly to brokers. Once this is extracted, let us add ZooKeeper to the environment variables. For this, go to Control Panel\All Control Panel Items\System, click Advanced System Settings and then Environment Variables, and then edit the system variables as below: 3.

The processing behavior of the consumer is to log the contents of a retrieved message.

key.serializer=org.apache.kafka.common.serialization.StringSerializer

Next, you'll see how the class SimpleConsumer retrieves and processes messages from a Kafka broker. This Properties class exposes all the values in config.properties as key-value pairs, as illustrated in Figure 2. Kafka stores the committed offset in a topic called __consumer_offsets. Prerequisites: If you don't have the Kafka cluster set up, follow the link to set up the single broker cluster. Thus, both the Java Development Kit and Maven need to be installed on the Linux computer you plan to use, should you decide to follow along in code. Declaring shutdown() and runAlways() as abstract ensures that they will be implemented. If you are new to Apache Kafka, then you should check out my article, Apache Kafka Core Concepts. Both SimpleProducer and SimpleConsumer get their configuration values from a helper class named PropertiesHelper. Now, start all 3 consumers one by one and then the producer.

Now each topic of a single broker will have partitions. You can create your custom partitioner by implementing the Partitioner interface. The location of the Kafka broker is defined in the config.properties file. Ideally, we will make duplicates of Consumer.java with the names Consumer1.java and Consumer2.java and run each of them individually. Before starting with an example, let's first get familiar with the common terms and some commands used in Kafka. The behavior of the callback object's processMessage() method is unknown to runAlways().
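The separation between message retrieval and message processing can be sketched without any Kafka dependency. The interface and class below are simplified, hypothetical stand-ins: the article's actual KafkaMessageHandler and runAlways() signatures may differ, and the list of strings stands in for records polled from a broker.

```java
import java.util.List;

/** Simplified, hypothetical stand-in for the article's KafkaMessageHandler interface. */
interface MessageHandlerSketch {
    void processMessage(String topicName, String message);
}

/** Hypothetical stand-in for the retrieval loop; it knows nothing about the handler's behavior. */
final class PollingLoopSketch {
    /** Hands each retrieved message to the callback and returns how many were delivered. */
    static int deliverAll(String topicName, List<String> messages, MessageHandlerSketch handler) {
        int delivered = 0;
        for (String message : messages) {
            // The loop only relies on the method signature, not on what the callback does.
            handler.processMessage(topicName, message);
            delivered++;
        }
        return delivered;
    }
}
```

A caller could pass a lambda that logs each message, such as `(topic, msg) -> System.out.println(topic + ": " + msg)`, or swap in a handler that writes to a database, without touching the retrieval code.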

If your value is some other object, then you create your own custom serializer class. Kafka can handle hundreds of thousands, if not millions, of messages a second.
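As a rough picture of what a string serializer and deserializer pair does, the following sketch converts a value to bytes and back with the Java standard library only. The class `StringCodecSketch` is hypothetical and does not implement Kafka's Serializer interface; UTF-8 is assumed as the encoding.

```java
import java.nio.charset.StandardCharsets;

/** Hypothetical illustration of string-to-bytes conversion (not a Kafka class). */
final class StringCodecSketch {
    /** Converts a value to the bytes that would travel over the network. */
    static byte[] serialize(String value) {
        return value == null ? null : value.getBytes(StandardCharsets.UTF_8);
    }

    /** Restores the original value on the consumer side. */
    static String deserialize(byte[] data) {
        return data == null ? null : new String(data, StandardCharsets.UTF_8);
    }
}
```

A custom serializer for another object type follows the same shape: one method that turns the object into a byte array and a matching method that rebuilds the object from those bytes.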

Both SimpleProducer and the testing class, SimpleProducerConsumerTest, are part of the same com.demo.kafka package. All that's required to get a new instance of KafkaProducer that's bound to a Kafka broker is to pass the configuration values defined in config.properties as a Properties object to the KafkaProducer constructor.
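Here is a minimal sketch of how configuration text becomes a Properties object, using only the Java standard library. The class `ConfigSketch` is hypothetical, and the broker address `localhost:9092` in the usage note is an assumed value, not one taken from the article's config.properties.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

/** Hypothetical helper that parses key=value configuration text. */
final class ConfigSketch {
    /** Parses configuration text into a Properties object of key-value pairs. */
    static Properties load(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            // Reading from an in-memory string should not fail; rethrow unchecked if it does.
            throw new UncheckedIOException(e);
        }
        return props;
    }
}
```

Calling `ConfigSketch.load("bootstrap.servers=localhost:9092\nkey.serializer=org.apache.kafka.common.serialization.StringSerializer\n")` yields a Properties object with two entries. In a project with the Kafka client library on the classpath, an object like this is what would be handed to the KafkaProducer constructor.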

It covers the basics of creating and using Kafka producers and consumers in Java. Messaging can be optimized by setting different parameters. The config.properties file is the single source of truth for configuration information for both the producer and consumer classes. A Kafka producer is instantiated by providing a set of key-value pairs as configuration. The complete details and explanation of different properties can be found here. Here, we are using the default serializer called StringSerializer for key and value serialization. These serializers are used for converting objects to bytes. Similarly, devglan-test is the name of the topic. A finally block is a must to avoid resource leaks.

This message contains a key, value, partition, and offset. Each line of code has a purpose.

New records are those created after the consumer group became active.