In this step, you create Kafka topics by using the Confluent Control Center. Download Confluent Platform and use this quick start to get up and running. To add the .NET client to a project, run Install-Package Confluent.Kafka -Version 1.9.0 or dotnet add package Confluent.Kafka --version 1.9.0. When you add a connector, review the connector configuration and click Launch. These examples write queries using the ksqlDB tab in Control Center, for example a query where a condition (gender = 'FEMALE') is met.

Set the environment variable for the Confluent Platform directory. Select the connect-default cluster and click Add connector. The goal is to insert rows into a SQLite database and show those rows on an auto-created Kafka topic via the JDBC source connector. When you are done working with the local install, you can stop Confluent Platform.
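
The SQLite side of the JDBC source scenario can be sketched in Python with the standard sqlite3 module. This is a minimal sketch, not the original article's commands: the table name accounts, its columns, and the sample rows are hypothetical, and the database path defaults to an in-memory database rather than the file the connector would read.

```python
import sqlite3


def create_and_fill(db_path=":memory:"):
    """Create a demo table and insert two rows; return the open connection.

    The table name and columns are hypothetical, chosen for illustration.
    """
    conn = sqlite3.connect(db_path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS accounts ("
        "id INTEGER PRIMARY KEY AUTOINCREMENT, "
        "name TEXT NOT NULL)"
    )
    # Parameterized inserts; each tuple becomes one row.
    conn.executemany(
        "INSERT INTO accounts (name) VALUES (?)",
        [("alice",), ("bob",)],
    )
    conn.commit()
    return conn


if __name__ == "__main__":
    connection = create_and_fill()
    for row in connection.execute("SELECT id, name FROM accounts ORDER BY id"):
        print(row)
    connection.close()
```

An auto-incrementing integer key is used here because a JDBC source connector typically needs some column that lets it detect new rows; which column and mode to use depends on the connector configuration.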

HealthChecks.Kafka is the health check package for Kafka. Click the Card view or Tabular view icon to change the layout.

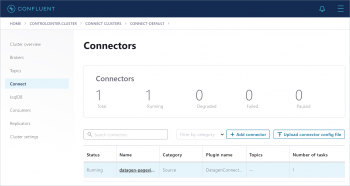

In this step, you use Kafka Connect to run a demo source connector called kafka-connect-datagen that creates sample data for the Kafka topics pageviews and users. This connector generates mock data for demonstration purposes and is not suitable for production. The confluent local commands are intended for a single-node development environment and are not suitable for a production environment. You need to separately install the correct version of Java before you start the Confluent Platform installation process. If you encountered any issues, for example Java errors or other severe errors, review the following resolutions before trying the steps again. For Cake build scripts, the Confluent.Kafka add-in directive is #addin nuget:?package=Confluent.Kafka&version=1.9.0
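
The connector configuration for the two Datagen instances can be sketched as a small Python helper that builds the JSON payload. This is a hedged sketch: the property names (connector.class, kafka.topic, quickstart, max.interval) are recalled from the kafka-connect-datagen documentation and should be checked against the installed version, and the connector names and the helper function itself are hypothetical.

```python
import json


def datagen_config(name, topic, quickstart, max_interval_ms=100):
    """Build a Kafka Connect payload for one Datagen connector instance.

    Property names are assumptions based on the kafka-connect-datagen docs;
    verify them against the connector version you installed.
    """
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.kafka.connect.datagen.DatagenConnector",
            "kafka.topic": topic,
            "quickstart": quickstart,
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "max.interval": str(max_interval_ms),
            "tasks.max": "1",
        },
    }


if __name__ == "__main__":
    # One instance per topic: pageviews and users.
    for topic in ("pageviews", "users"):
        payload = datagen_config(f"datagen-{topic}", topic, topic)
        print(json.dumps(payload, indent=2))
```

The same values can be entered by hand in the Control Center connector form; the helper only makes the two configurations easy to compare.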

Java 8 and Java 11 are supported in this version of Confluent Platform (Java 9 and 10 are not supported). In this step, ksqlDB is used to create a stream for the pageviews topic, and a table for the users topic. When a query is grouping and counting, the result is now a table, rather than a stream.
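
The difference between a stream and a table can be illustrated in plain Python, without ksqlDB. This is a conceptual sketch under stated assumptions: the sample events and their field values are hypothetical and already carry gender and regionid, and the two functions only mimic what a filter query and a group-and-count query compute.

```python
from collections import Counter

# Hypothetical enriched pageview events (not real quick start output).
EVENTS = [
    {"userid": "User_1", "pageid": "Page_10", "gender": "FEMALE", "regionid": "Region_8"},
    {"userid": "User_2", "pageid": "Page_11", "gender": "MALE", "regionid": "Region_8"},
    {"userid": "User_1", "pageid": "Page_12", "gender": "FEMALE", "regionid": "Region_8"},
    {"userid": "User_3", "pageid": "Page_13", "gender": "FEMALE", "regionid": "Region_9"},
]


def filter_female(events):
    """Stream-like result: one output event per matching input event."""
    return [e for e in events if e["gender"] == "FEMALE"]


def count_by_region_gender(events):
    """Table-like result: one current count per (regionid, gender) key."""
    return Counter((e["regionid"], e["gender"]) for e in events)


if __name__ == "__main__":
    print(len(filter_female(EVENTS)))
    print(dict(count_by_region_gender(EVENTS)))
```

Filtering keeps every matching event, so the output is still a sequence of events. Grouping and counting collapses events into one value per key, which is why the result behaves like a table.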

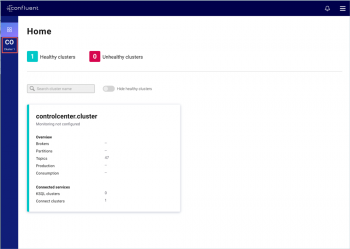

Confluent Platform is a full-scale data streaming platform that enables you to easily access, store, and manage data as continuous, real-time streams. A companion package provides an Avro serializer and deserializer for use with Confluent.Kafka, with Confluent Schema Registry integration. Run the install command with the -y flag to quickly install the packages and dependencies.

The confluent local start command starts all of the Confluent Platform components. Navigate to the Control Center web interface at http://localhost:9021/ and select your cluster. It may take a minute or two for Control Center to come online. Select Topics from the cluster submenu and click Add a topic.

Install the Kafka Connect Datagen source connector from Confluent Hub. The Kafka Connect Datagen connector was installed manually in Step 1: Download and Start Confluent Platform. This example uses the connect-avro-distributed.properties file. To narrow displayed connectors, click Filter by type -> Sources. Find the DatagenConnector tile and click Connect. If you encounter issues locating the Datagen Connector, refer to the Issue: Cannot locate the Datagen Connector in the Troubleshooting section. Resolution: Verify the .jar file for kafka-connect-datagen has been added and is present in the lib subfolder. Check the system logs to confirm that there are no related errors.

In this step, SQL queries are run on the pageviews and users topics that were created in the previous step. The All available streams and tables section lists the streams and tables that you can access. Results from the query that counts pageviews by region and gender are written to a Kafka topic called PAGEVIEWS_REGIONS. Click the consumer group ID to view details for the _confluent-ksql-default_query_CSAS_PAGEVIEWS_FEMALE consumer group.

This query enriches the pageviews STREAM by doing a LEFT JOIN with the users TABLE on the user ID. When you installed the kafka-connect-datagen file from Confluent Hub, the installation directory was added to the plugin path of several properties files. Set the auto.offset.reset parameter to earliest. Errors can occur if the Docker memory allocation does not meet the minimum requirement.
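
The LEFT JOIN enrichment described above can be sketched in plain Python to show the semantics. This is an illustration, not ksqlDB code: the sample records, field names other than the user ID, and the helper function are hypothetical.

```python
def left_join(pageviews, users):
    """Enrich each pageview with the matching user's fields.

    Left-join semantics: every pageview is kept; when no user matches,
    the user fields are filled with None.
    """
    enriched = []
    for view in pageviews:
        user = users.get(view["userid"])  # users acts as the table, keyed by user ID
        enriched.append(
            {
                **view,
                "regionid": user["regionid"] if user else None,
                "gender": user["gender"] if user else None,
            }
        )
    return enriched


if __name__ == "__main__":
    # Hypothetical sample data.
    users_table = {"User_1": {"regionid": "Region_8", "gender": "FEMALE"}}
    pageview_stream = [
        {"userid": "User_1", "pageid": "Page_10"},
        {"userid": "User_9", "pageid": "Page_11"},
    ]
    for record in left_join(pageview_stream, users_table):
        print(record)
```

The users side is modeled as a dictionary because a table holds one current value per key, while the pageviews side stays a list of events.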

This quick start demonstrates both the basic and most powerful capabilities of Confluent Platform and its main components in a development environment. You can also run an automated version of this quick start designed for Confluent Platform local installs.

Go to the downloads page and choose Confluent Platform. Provide your name and email and select Download, and then choose the desired format, .tar.gz or .zip. You should have the directories, such as bin and etc. Start Confluent Platform using the Confluent CLI confluent local start command. Execute the commands above step by step.

The default memory allocation on Docker Desktop for Mac is 2 GB and must be changed in the Docker preferences for resources.

Run another instance of the Kafka Connect Datagen connector for the users topic. Create a non-persistent query that returns data from a stream with the results limited to a maximum of three rows. The results from the query that filters for female users are written to the Kafka PAGEVIEWS_FEMALE topic. Create a persistent query where a condition (regionid) is met, using LIKE. Navigate to the Consumers tab to view the consumers created by ksqlDB. For more information, see the Control Center Consumers documentation.

Resolution: Verify the plugin exists in the connector path.

Apache Kafka is an open-source community distributed event streaming platform used by thousands of corporations for high-performance streaming, data pipelines, and critical applications. Confluent Hub is an online library of pre-packaged and ready-to-install extensions or add-ons for Confluent Platform and Kafka. In this quick start, you create Apache Kafka topics, use Kafka Connect to generate mock data to those topics, and create ksqlDB streaming queries on those topics. You then go to Control Center to monitor and analyze the streaming queries. Release notes for the .NET client are at https://github.com/confluentinc/confluent-kafka-dotnet/releases.

Decompress the file. You must allocate a minimum of 8 GB of Docker memory resource. Install confluent-hub and the Kafka Connect JDBC connector. Run one instance of the Kafka Connect Datagen connector for the pageviews topic. You may need to search to locate DatagenConnector; there are multiple connectors in the menu.

From your cluster, click ksqlDB and choose the ksqlDB application. Setting auto.offset.reset to earliest instructs ksqlDB queries to read all available topic data from the beginning. This configuration is used for each subsequent query. Create a persistent query that filters for female users. Create a persistent query that counts the pageviews for each region and gender combination.

You can create a SQLite database, create a table, and insert rows. Then look at the Connect log to see whether the JDBC source connector fails or works successfully. Finally, look at the Kafka topic to see your newly added record.

Resolution: Verify the DataGen Connector is installed and running. Resolution: Verify that you have added the location of the Confluent Platform bin directory to your PATH. An error can occur if you created streams for both pageviews and users by mistake.

You can start the local install of Confluent Platform again with the confluent local start command. Destroy the data in the Confluent Platform instance with the confluent local destroy command.

Copyright 2016-2020 Confluent Inc., Andreas Heider.