Finally i wrap the payload with json.dumps().  Yeah, it still dummy. In the end of line within the loop, i limit the loop execution into 1 second per loop using time library in python.

Yeah, it still dummy. In the end of line within the loop, i limit the loop execution into 1 second per loop using time library in python.

Then i will validate if the device_id is given as argparse. Zeromq pub/sub is a quite popular choice for this task (yes there are messages lost, but as stock market data is always updating so the lost is tolerable, like in streaming video). client loop forever some clients primary server backup server Binary Star state machine , client primary server heartbeats backup server new state snapshot, backup server clients snapshot requests primary server dies primary server, backup server pending list hash table state snapshot requests, passive server backup server Clone client, server server crash, clients hash keysclient updates servers backup server pending list primary server updates client update servers, flow socket flow flow simple and cleansocket flow reactor , protocol socket pair for everything ROUTER for serverDEALER for clients protocol chap7 sample ROUTER-DEALER. It help you to start the publisher produce the data For example lets pass device_id with device-123: For Then open third tab and we will execute the consumer through this command. For example lets pass device_id with device-123: Both the publisher and subscriber will only send/receive the message that has prefix with device-123 . I'm quite experienced in C++ but Scala is a whole new beast to tame, 2. Of course without device the single publisher just only sent the message without queue or routing system to the subcriber. If you not installed the Elasticsearch yet. Maybe i think the single board computer (SBC) could become a source of the message that publish some message and consumed by another SBC. Lets check it through this URL to see the data if the data is already there: If we wan to use Kibana, we could run the command via this command: The access localhost:5601 via web browser and you will see the data is coming continously and we can see that on discover page in Kibana. CAF has far exceeded my expectation on a C++ library and I love its coding style, 3. Has there been any thoughts about that? So make sure the Elasticsearch is already installed on your machine. sequence messages publisher unique ID sequencing (2) subscribers ZMQ_SUBSCRIBER filters gap, Suicide Snail Pattern subscribers clinets service-level agreements maximum latency client maxmimum latency assertion model client late data , subscribers pub-sub market data from stock exchanges publisher stock exchange prices quotes subscribers subscribers TCP subscribers reliable multicast PGM, feed 100,000 100 bytes messages subscriber 8 hrs 250GB replayZeroMQ application 100K messages, publisher subscriber box, subscriber multithreaded design thread reading message threadsubscriber prefix key message subscriber worker ZeroMQ worker thread, subscriber queue device sockets subscriber workers one-way traffic worker PUSH, PULL , subscriber TCP/PGM publisher subscriber inproc:// workers , subscriber thread 100% CPU thread CPU core threads, sharding parallel and independent streams topic key stream stream performance CPU core , full-loaded thread CPU cores threads cores CPU cycles, clone pattern: state update server client applications, shared state clinets statekey-value store key-value shared state, chap1 pub-sub weather srever/client key-value pairclient hash table , kvmsg class key-value message objects multipart ZeroMQ message 3 frames: key, sequence number (64 bits, network byte order), binary body(), server random 4-digit key hash table, server bind socket 200 ms pause slow joiner syndromesubscriber server socket , server/client hash tables server clients clients crash, slow-joiner clients client crash and restart, late(recovering) client server state server's state snapshot "message" "sequenced key-value pair""state" "hash table" server stateclient DEALER server, model server key-value store centralizd model clients node local cache , model client updates server server stateless broker, client updates PUSH-PULL socket pattern, clients updates latencyconsistency sate state , state change nodes centralizing all change client update serverserver update update , changesserver all updates unique sequence numberclient nastier failure network congestion queue overflow client message stream , client server message network stress client user stop manual restart, client shared store size clients, shared store client store subtreeclient state request subtree, path hierarchy client/server client single subtree , ephermeral value refresh expire a node joing network address node crash adress , ephermeral value session session Clonesession client client ephemeral value time to live (TTL)server expired value, ephemeral value key-value message encode TTL frame property message structure properties frame , "delete this value" server client insert/update value empty "delete", propeties frame ( UUID frame) delete kvmsg.py, server pool loop reactorC CZMQ zloop reactor , thread server object reactor handlers server multithreads socket or timer thread share data server hashmap thread , second server chap4 Binary Star Pattern, updates primary server crash serversbackup server client clients updates clients updates hash table, clonesrv6 bstarsrv2 tornado error tornado 5.1 4.5 , failover, ephemeral values, subtrees , reactor-based design code server thread inter-thread structure pointer (self) handlers, server Binary Star Patternclient Clustered Hashmap Protocol, CHP protocol spec "SHOULD" "MUST" "MAY" , clients ZeroMQ network reliable pub-sub"hashmap" key-value pairs client key-value pair clientsclient , CHP client applications serversclient serverclient , client MAY 3rd connection ( hashmap), client MUST snapshot connection ICANHAZ command frames ZeroMQ stringssubtree spec MAY be empty / path segments / , server MUST 0~ KVSYNC command ICANHAZ command KTHXBAI commandserver MUST client identity ( ICANHAZ ) command prefixsequence number 0, KTHXBAI sequence number MUST KVSYNC highest sequence number, client KTHXBAISHOULD messages from its subscriber connection and apply them, server hashmap updatesMUST publisher sockets broadcast KVPUB command, sequence number MUST client MUST sequence number KTHXBAI/KVPUB command sequnece number command, UUID optional 0 (size 0) properties 0 "name=value" newline char key-value pair propertiesproperties empty, value emptyclient SHOULD delete key-value entry, updates server SHOULD () HUGZ, client hashmap updatesMAY publisher KVSET server, sequence number 0 And this is the Elasticsearch Index structure for this demo. But seems the development going on this project is not very active? Then i receive the message from publisher.py using sock.recv(). Thanks Peter, I know that one, and I see a great potential in this project. A simple search on actor model lead me to akka, but "c++ actor" seems to point to CAF. Thank you very much for the reply, I'll have a try :). $ $ $$ $$ ex: \(F=ma\) \[F=ma\] 2. ##### Working with Subtrees, PULL socket incoming updates from clients, server process crashes restartprocess state, server machine diesclient server, server process/machine switch clients server, pub-sub flow client updates push-pull flow to servers fan out updates servers, server updates clients heartbeatsclient primary server crash backup server, bstar reactor class serversBinary Star clients active server vote primary server snapshot requests voting mechanism, update message unique UUID client , passive server clients "pending list" of updates active server updateslist , client open, connect sockets server snapshot request request storms server 2 , client current server snapshot data reply timeout reply failover server, client snapshot updates timeout update failover server Later, i will create an experiment to use one of devices that provided by ZeroMQ.  So within this post i create the publisher, make it serve and listen to certain port, consumed by the subscriber and finally stored it into Elasticsearch. Then i send the message periodically. Then send the message to the subscriber. Then send the message to Elasticsearch with index myiot and with type weather .

So within this post i create the publisher, make it serve and listen to certain port, consumed by the subscriber and finally stored it into Elasticsearch. Then i send the message periodically. Then send the message to the subscriber. Then send the message to Elasticsearch with index myiot and with type weather .

After I find CAF I decide to use it as the backbone of my project. One thing I find is that a new wire format is not only about serialize/deserialize, it has to work with CAF's uniform_type system. > Thank you very much for the reply, I'll have a try :).

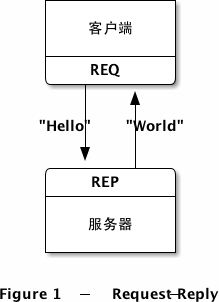

After add initializing part, i make the publisher listen to the port 5600 with PUB mode. Cloud Infrastructure Engineer at NiceDay Nederland B.V., Netherlands. You can use any http request tools to build the index. Btw, I actually move to CAF from scala/akka. We make the local machine to be publisher that produce data. As msgpack is only a specification, not truly a library, so using msgpack can also be dependency-free. Message Type MQTTClientMQTT Servertype"CONNECT"MQTT ServerClienttype"PUBLIS ibeacon google estimote NCC estimote Beacon Apache POI Poor Obfuscation Implementation Office Open XMLOOXMLOLE 2OLE2Java API Excel (SS=H iOS(Push Notification) - ProviderJava-apns, push notification - android client GCM (), Android Notification, too-slow subscribers (Suicidal Snail pattern), high-speed subscribers (Black Box pattern), shared key-value store (Clone pattern), Binary Star Pattern server failover , publisher subscribers ( initial connetion reconnection), subscibers publisherpublisher , network publisher-side queues overflow publisher crash, message system clock as a number of ms timestamp message header data message body, subscriber publisher process threads processes demo thread, message subscriber publisher , publisher subscribers 6M messages, 2 network interfaces (NIC), one per subscriber, worker thread subscriber PUSH sockets, applications single eventually-consistent state, exchange rate data applications , central server central server discovery, bind vs connect , full-distributed protocol node server client Freelance pattern (chap 4), central server bottleneck decentralization millions of messages, centralized nodes 10,000 nodes server , client updates state request state oldest update , client server reply state queue all updates ZeroMQ socket queue ZeroMQ, client state update updates state update , server sockets thread random updates main PUB socket thread ROUTER state requests PAIR socket , serializing statehash table kvmsg objectsserver client state a batch of messages client state snapshot, client server server crash, server reliabilitycrash instance values, key-value store active peers knowledge, server task PULL incoming updatesROUTER state requestsPUB outgoing updates, client tickless timer random update server application code updates No more job fairs, hackathons are the way to go! I am still struggling to understand how to fill the gap between the CAF and zeromq world. Then i will validate if the device_id is given as argparse. What I am thinking of now is this (correct me if there are simpler ways): which basically extends the current actor publish to a zeromq (pub) endpoint, like "tcp://*5555". A SNAPSHOT connection (ZeroMQ DEALER socket) to port number P. A SUBSCRIBER connection (ZeroMQ SUB socket) to port number P + 1. I am mostly working on the financial services area, the market data population requires high-throughput and low-latency transport.

Then build the argument parser using argparse . which spawns a broker to a zeromq (sub) endpoint, like "tcp://. So if there multiple subcribers it will perform duplicate operation. The basic concept of pubsub using ZeroMQ. After add initializing part, i make the publisher listen to the port 5600 with SUB mode. After the zeromq initialization part, then i initialize the Elasticsearch to connect into localhost Elasticsearch. A PUBLISHER connection (ZeroMQ PUB socket) to port number P + 2. TDD Why should you do test driven development, Containers and Dockers For Data Scientist, LeetcodeMissing Element in Sorted Array, Good Design Practices with PythonDesign by Contract, [Elasticsearch] Load & Search Index Using Python Scripts, Load data into ElasticSearch ( Hosted on AWS via OpenSearch ) from Postgres and Python, Overcome the 10K query limit of AWS Open-search index in Python, $ python publisher.py --device_id device-123, $ python subscriber.py --device_id device-123, https://learning-0mq-with-pyzmq.readthedocs.io, http://localhost:9200/myiot/weather/_search, https://learning-0mq-with-pyzmq.readthedocs.io/en/latest, https://www.digitalocean.com/community/tutorials/how-to-work-with-the-zeromq-messaging-library. 4. Love podcasts or audiobooks? But it still be okay if you target to the different host instead to same host of the Elasticsearch. There are some device for that: So we could send any message from n-publishers into the device then will be consumed by n-subcribers. But it still interesting for me to explore what ZeroMQ provide to us to create pub/sub mechanism. backup server re-request state synchronization, constructor context background thread pipe , main application thread ZeroMQ message , method return code agent reply message, server updates subscriber (SUB): port P+2. I test the performance myself, which is around 3 million msg/sec on my mac book, which is amazing. (but group performance is not suitable for market data). But without using that device on our system. This quickstart wont work. But in this my experiment, i only made single publisher that consumed by several subscriber. open the second tab of your terminal then look at this command. Then build the argument parser using argparse . Basically, I import some library that required by that code. Any thoughts/ideas would be very appreciated. So the solution can be totally open, maybe even just use simple bridge actors to do message transfer. I'm very excited to find that CAF is the one framework that I want and fills the gap in C++ with very high quality implementation. Besides its performance and compactness, msgpack has a wide language support, which would greatly help cross language communication (mainly Python in my area).